TrustMap Framework

Discover how the TrustMap framework supports the ethical, transparent, and trustworthy deployment of Generative AI and Large Language Models (LLMs). A review of 2025 research on AI governance and responsible innovation.

📌 Authors: Sarah Kristin Lier, Leon Nowikow, Michael H. Breitner

📅 Published: October 2025

📖 Conference: ICIS 2025 Proceedings

What is TrustMap in Generative AI?

TrustMap is a four-phase process model proposed by researchers in 2025 to evaluate and ensure the trustworthiness of Generative AI systems, especially Large Language Models (LLMs). It integrates ethical principles, regulatory standards, and real-world implementation strategies into a repeatable monitoring framework.
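A four-phase, repeatable review can be pictured as an ordered pipeline of checks. The Python sketch below is an illustration, not the authors' implementation. The phase names follow the Principles, Regulatory, Implementation, and Monitoring phases described later in this article. The `Phase`, `Check`, and `Review` names, the example checks, and the fields of the `system` dictionary are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List


class Phase(Enum):
    """TrustMap phases in the order this article presents them."""
    PRINCIPLES = 1
    REGULATORY = 2
    IMPLEMENTATION = 3
    MONITORING = 4


@dataclass
class Check:
    """A hypothetical trust check that belongs to exactly one phase."""
    name: str
    phase: Phase
    predicate: Callable[[dict], bool]  # True means the system passes this check


@dataclass
class Review:
    """One review cycle over a system description; re-run it to repeat the review."""
    system: dict
    checks: List[Check] = field(default_factory=list)

    def run(self) -> Dict[Phase, List[str]]:
        """Evaluate checks phase by phase and collect the names of failed checks."""
        failures: Dict[Phase, List[str]] = {phase: [] for phase in Phase}
        for phase in Phase:  # iterating an Enum follows definition order
            for check in self.checks:
                if check.phase is phase and not check.predicate(self.system):
                    failures[phase].append(check.name)
        return failures


# Illustrative usage with made-up system attributes.
system = {"privacy_policy": True, "risk_tier": "high", "human_review": False, "drift_alerts": True}
review = Review(system, [
    Check("privacy policy documented", Phase.PRINCIPLES, lambda s: s["privacy_policy"]),
    Check("risk tier assigned", Phase.REGULATORY, lambda s: s.get("risk_tier") is not None),
    Check("human review enabled", Phase.IMPLEMENTATION, lambda s: s["human_review"]),
    Check("drift alerts configured", Phase.MONITORING, lambda s: s["drift_alerts"]),
])
report = review.run()
print(report[Phase.IMPLEMENTATION])  # ['human review enabled']
```

Grouping failures by phase keeps the report aligned with the model's structure. Which checks belong in each phase would come from the requirements an organization actually adopts.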

Overview: Why Trust in GenAI Matters

The rise of Generative Artificial Intelligence (GenAI), including popular Large Language Models (LLMs) like GPT-4, Claude, and Gemini, has reshaped how businesses and users interact with AI. Yet, this widespread adoption brings critical concerns:

- Data misuse

- Disinformation

- Overreliance on AI outputs

- Discrimination and bias

This 2025 research paper addresses a timely and essential question:

👉 How can we design GenAI systems that people can trust?


Research Objectives

The authors aim to:

- Define requirements for Trustworthy AI (TAI) in the GenAI context.

- Consolidate current ethical, legal, and technical standards.

- Propose a practical implementation model, TrustMap, for developers, businesses, and policymakers.


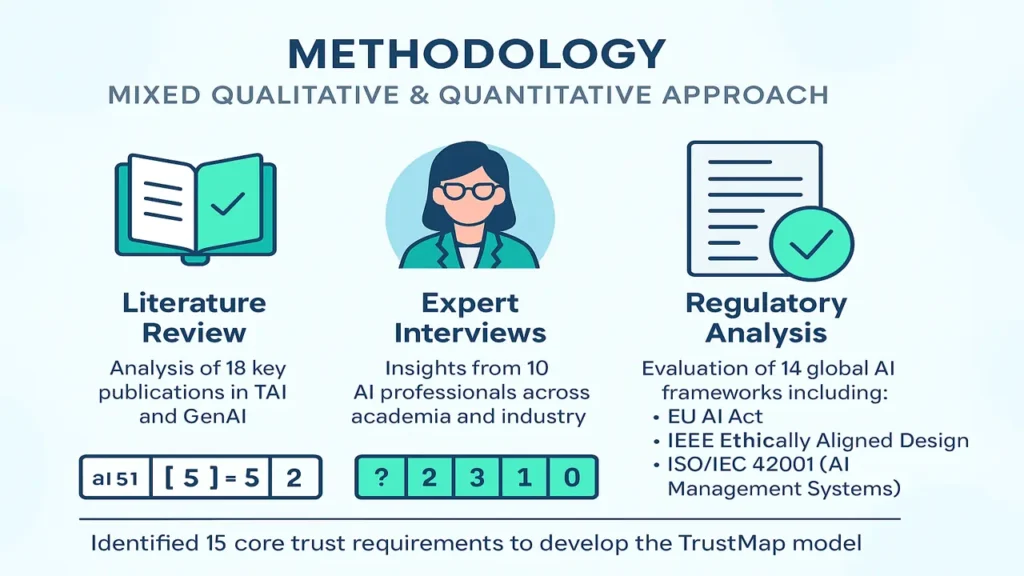

Methodology: Mixed Qualitative & Quantitative Approach

The study triangulates three sources of evidence:

- Literature Review – Analysis of 18 key publications in TAI and GenAI.

- Expert Interviews – Insights from 10 AI professionals across academia and industry.

- Regulatory Analysis – Evaluation of 14 global AI frameworks, including:

  - EU AI Act

  - IEEE Ethically Aligned Design

  - ISO/IEC 42001 (AI Management Systems)

From these, the authors identified 15 core trust requirements, which they used to design the TrustMap model.

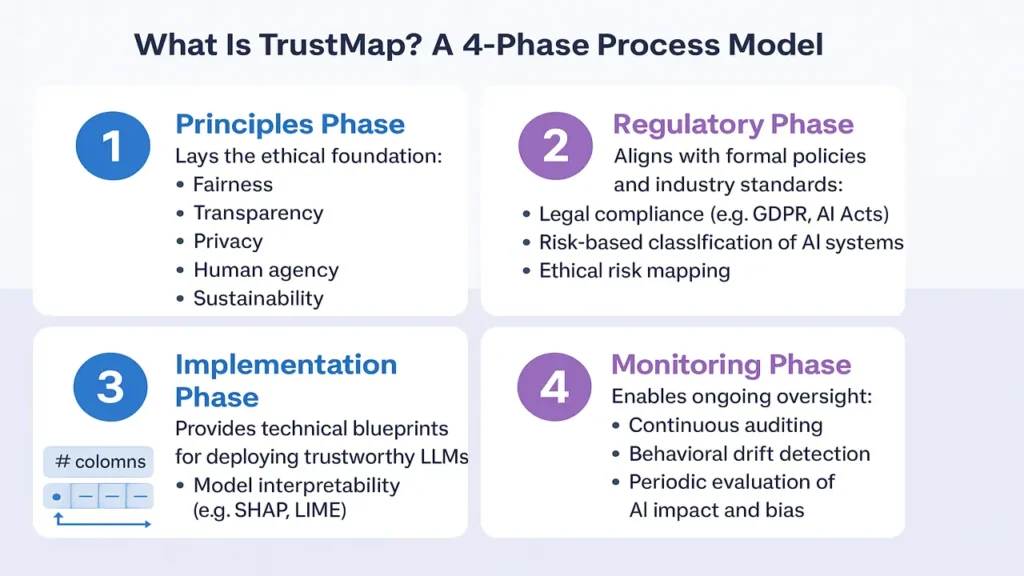

What Is TrustMap? A 4-Phase Process Model

Principles Phase

Lays the ethical foundation:

- Fairness

- Transparency

- Privacy

- Human agency

- Sustainability

Regulatory Phase

Aligns with formal policies and industry standards:

- Legal compliance (e.g., GDPR, the EU AI Act)

- Risk-based classification of AI systems

- Ethical risk mapping
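Risk-based classification can be sketched as mapping a system's declared use cases to a risk tier. The sketch below borrows the four tiers of the EU AI Act's risk-based approach (unacceptable, high, limited, minimal) but is heavily simplified. The use-case tags and the sets they fall into are hypothetical examples, not a legal reading of the Act, and the code is not taken from the TrustMap paper.

```python
from enum import IntEnum
from typing import Iterable


class RiskTier(IntEnum):
    """Simplified tiers modeled on the EU AI Act's risk-based approach."""
    MINIMAL = 0       # no specific obligations
    LIMITED = 1       # transparency duties, e.g. disclosing that users interact with AI
    HIGH = 2          # stricter requirements such as documentation and human oversight
    UNACCEPTABLE = 3  # prohibited practices


# Hypothetical use-case tags; a real assessment must work from the legal text itself.
PROHIBITED_USES = {"social_scoring"}
HIGH_RISK_USES = {"recruitment", "credit_scoring", "exam_grading"}
TRANSPARENCY_USES = {"chatbot", "synthetic_media"}


def classify(use_cases: Iterable[str]) -> RiskTier:
    """Return the most severe tier triggered by any declared use case."""
    tier = RiskTier.MINIMAL
    for use in use_cases:
        if use in PROHIBITED_USES:
            return RiskTier.UNACCEPTABLE  # nothing can outrank a prohibited practice
        if use in HIGH_RISK_USES:
            tier = max(tier, RiskTier.HIGH)
        elif use in TRANSPARENCY_USES:
            tier = max(tier, RiskTier.LIMITED)
    return tier


print(classify({"chatbot", "recruitment"}).name)  # HIGH
```

Taking the maximum over use cases means a system inherits the strictest obligations of anything it is used for. Unknown tags fall through to the minimal tier here; a real process would more likely treat them as "needs review".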

Implementation Phase

Provides technical blueprints for deploying trustworthy LLMs:

- Model interpretability (e.g., SHAP, LIME)

- Guardrails against hallucinations

- Human-in-the-loop feedback mechanisms
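The guardrail and human-in-the-loop items can be combined in one small sketch: flag answer sentences that are poorly supported by the source documents, and hold flagged answers for human review instead of returning them. The lexical-overlap check below is a deliberately crude stand-in chosen for illustration, not a technique from the paper. The `min_overlap` threshold, the stopword list, and the `ReviewQueue` class are all hypothetical.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Tiny illustrative stopword list; a real system would use a proper NLP pipeline.
STOPWORDS = {"the", "a", "an", "is", "are", "was", "it", "of", "in", "on", "and", "or", "to"}


def content_tokens(text: str) -> set:
    """Lowercased alphanumeric tokens with stopwords removed."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}


def unsupported_sentences(answer: str, sources: List[str], min_overlap: float = 0.6) -> List[str]:
    """Return answer sentences whose content words mostly do not appear in any source."""
    source_vocab = set().union(*(content_tokens(s) for s in sources))
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        tokens = content_tokens(sentence)
        if tokens and len(tokens & source_vocab) / len(tokens) < min_overlap:
            flagged.append(sentence)
    return flagged


@dataclass
class ReviewQueue:
    """Hypothetical human-in-the-loop queue for answers the guardrail flags."""
    pending: List[Dict[str, object]] = field(default_factory=list)

    def route(self, answer: str, sources: List[str]) -> Optional[str]:
        """Release the answer if it passes; otherwise queue it and return None."""
        flagged = unsupported_sentences(answer, sources)
        if flagged:
            self.pending.append({"answer": answer, "flagged": flagged})
            return None
        return answer


sources = ["TrustMap is a four-phase process model for trustworthy generative AI."]
queue = ReviewQueue()
print(queue.route("TrustMap is a four-phase process model.", sources))
print(queue.route("TrustMap is a four-phase process model. It was invented in 1850.", sources))
print(queue.pending[0]["flagged"])
```

Word overlap cannot tell a paraphrase from a fabrication, so a production guardrail would need stronger checks. The point of the sketch is the control flow: anything the guardrail cannot vouch for goes to a person rather than straight to the user.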

Monitoring Phase

Enables ongoing oversight:

- Continuous auditing

- Behavioral drift detection

- Periodic evaluation of AI impact and bias
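Behavioral drift detection and periodic bias evaluation can both be expressed as comparisons between distributions. The sketch below uses two generic metrics chosen for illustration, not prescribed by TrustMap: the Population Stability Index (PSI) between a reference window and a current window of categorical model outputs, and the demographic parity gap, the largest difference in positive-outcome rates between groups. The output labels, group names, and the 0.25 alert threshold (a commonly used rule of thumb for PSI) are assumptions.

```python
import math
from collections import Counter
from typing import Dict, List


def shares(labels: List[str], categories: List[str], eps: float = 1e-4) -> List[float]:
    """Fraction of labels in each category, floored at eps so log() stays finite."""
    counts = Counter(labels)
    return [max(counts[c] / len(labels), eps) for c in categories]


def population_stability_index(reference: List[str], current: List[str]) -> float:
    """PSI = sum((cur - ref) * ln(cur / ref)) over categories; 0 means no shift."""
    categories = sorted(set(reference) | set(current))
    ref = shares(reference, categories)
    cur = shares(current, categories)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def demographic_parity_gap(outcomes: Dict[str, List[int]]) -> float:
    """Largest gap in positive-outcome rate (1 = positive) between any two groups."""
    rates = [sum(v) / len(v) for v in outcomes.values()]
    return max(rates) - min(rates)


PSI_ALERT = 0.25  # assumed threshold, following a common rule of thumb

# Hypothetical monitoring windows of output labels.
last_month = ["answered"] * 90 + ["refused"] * 10
this_week = ["answered"] * 60 + ["refused"] * 40
psi = population_stability_index(last_month, this_week)
print(round(psi, 3), psi > PSI_ALERT)  # 0.538 True

# Hypothetical approval decisions per applicant group.
print(demographic_parity_gap({"group_a": [1, 1, 1, 0], "group_b": [1, 0, 0, 0]}))  # 0.5
```

Flooring empty categories at a small epsilon keeps PSI defined when a label appears in only one window, at the cost of inflating the score for rare new categories.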

Why This Paper Matters

TrustMap is one of the first structured models that:

- Applies TAI frameworks specifically to LLMs

- Connects regulatory compliance directly to technical design

- Offers practical steps for implementation and monitoring

- Supports multi-stakeholder governance, from developers to regulators

Use Cases for the TrustMap Model

- AI Startups: Design safer generative models from day one

- Enterprises: Audit internal AI systems for ethical and regulatory compliance

- Policy Makers: Build guidelines based on a robust academic foundation

- Academics: Use it as a springboard for further research in explainable and ethical AI

Comparison with Other Trust Models

| Feature | TrustMap (2025) | EU AI Act | NIST AI RMF |
|---|---|---|---|
| Specific to GenAI | Yes | Partial (general-purpose AI model provisions) | Partial (separate Generative AI Profile, NIST AI 600-1) |
| Includes ethical & regulatory phases | Yes | Partial | Partial |
| Technical guidance | Yes | No | Limited |
| Monitoring tools | Yes | No | Yes |

Final Thoughts

This 2025 study makes a compelling case for bridging the gap between AI ethics and practice. As Generative AI becomes foundational to decision-making, TrustMap offers a valuable roadmap for building, deploying, and auditing AI responsibly.

💡 If you’re in AI development, policy, or research, this model deserves your attention.
