Activation functions are a core part of Deep Learning because they allow neural networks to learn complex, non-linear patterns. Without activation functions, every neural network would behave like a linear regression model, no matter how many layers it had.

In simple terms:

Activation functions give neural networks their “intelligence.”

Why Do We Need Activation Functions?

Neural networks learn by transforming inputs through weighted connections. If no activation function is applied, the entire network becomes:

Linear combination → linear combination → linear combinationBut real-world problems like image classification, speech recognition, and language understanding are non-linear.

Without activation functions:

Neural networks cannot learn complex boundaries

Deep layers become useless

The entire model collapses into a single linear function

With activation functions:

Neural networks learn curves, edges, colors

Handle complex decision boundaries

Work for classification, vision, NLP, reinforcement learning

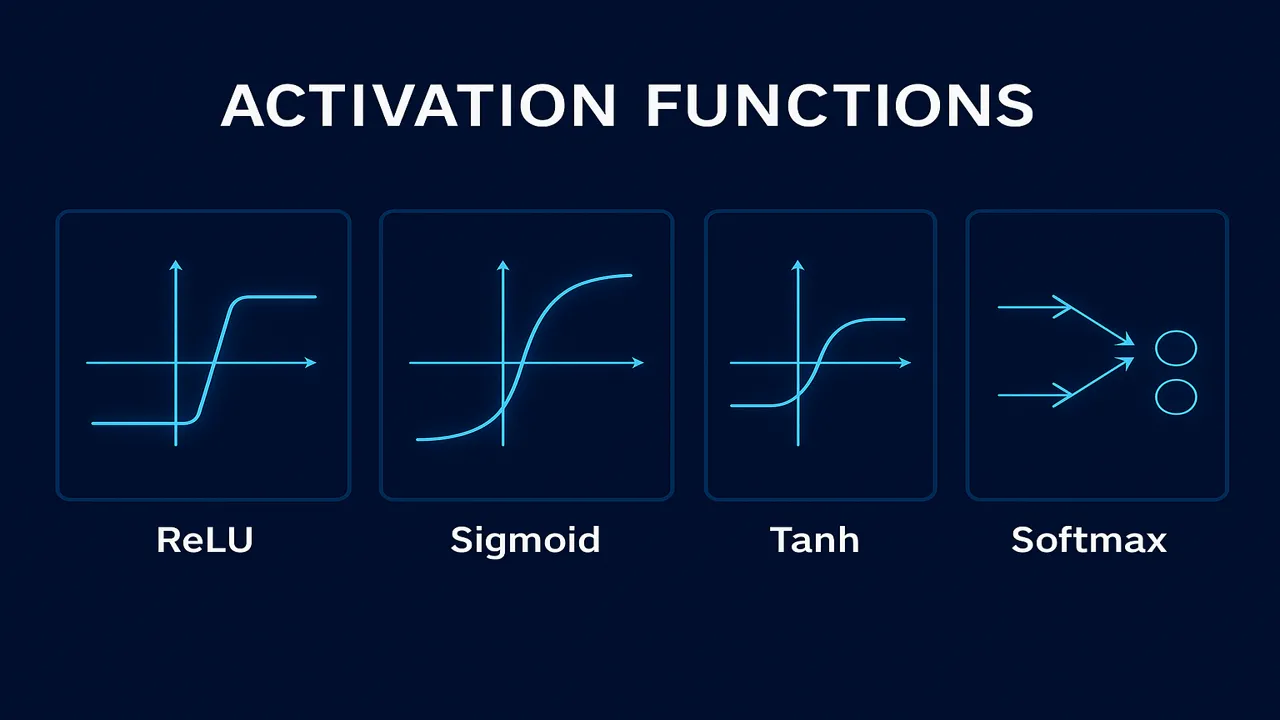

Types of Activation Functions

Below are the most widely used functions in modern deep learning.

Sigmoid Function

Formula:

σ(x) = 1 / (1 + e^(-x))Range: 0 to 1

Use cases:

- Binary classification

- Output layer of logistic regression

Problems:

- Vanishing gradients

- Slow training

Example:

If x = 2

σ(2) = 0.88 → very confident “yes”.

Tanh Function

Range: -1 to 1

Better than sigmoid because centered around zero.

Use cases:

- Hidden layers in older RNNs

- Sentiment signals (negative/positive)

Problem:

Still suffers from vanishing gradient.

https://developers.google.com/machine-learning

ReLU (Rectified Linear Unit)

Formula:

ReLU(x) = max(0, x)Why it’s the king:

Fast training

No vanishing gradient (for x > 0)

Works in almost every modern model

Use cases:

- CNNs (image processing)

- Dense layers

- Transformers

Problem:

- “Dying ReLU” where neurons get stuck at zero.

Leaky ReLU

Fixes dying ReLU:

f(x) = x (if x > 0)

f(x) = 0.01x (if x < 0)Use cases:

- Deep CNNs

- GANs

Softmax Function

Used for multi-class classification.

Formula:

softmax(x_i) = e^(x_i) / Σ e^(x_j)Turns raw scores → probabilities (sum = 1)

Use Case:

- Output layer of image classifiers (e.g., 10 digits)

Step-by-Step Example (Softmax)

Suppose a model outputs raw scores:

[2.0, 1.0, 0.1]Compute exponential values:

e^2.0 = 7.38

e^1.0 = 2.71

e^0.1 = 1.10Sum = 11.19

Softmax probabilities:

Class A: 7.38 / 11.19 = 0.66

Class B: 2.71 / 11.19 = 0.24

Class C: 1.10 / 11.19 = 0.10When to Use Which Activation?

| Activation | Best For | Avoid When |

|---|---|---|

| Sigmoid | Binary output | Deep networks |

| Tanh | RNNs | High depth |

| ReLU | CNNs, Transformers | Dying ReLU risk |

| Leaky ReLU | GANs | Rarely needed |

| Softmax | Multi-class output | Hidden layers |

Summary

Activation functions convert linear neurons into powerful non-linear decision makers.

Choosing the correct activation is crucial for fast training and good accuracy.

People also ask:

Activation functions are mathematical operations that add non-linearity to neural networks.

ReLU trains faster and avoids vanishing gradients, making it more efficient for deep models.

Softmax for multi-class, Sigmoid for binary classification.

Yes a wrong activation can slow training or completely ruin performance.

ReLU for hidden layers, Softmax/Sigmoid for outputs.