Introduction

Recurrent Neural Networks (RNNs) struggle with long-term memory because, during backpropagation through time, gradients tend to either explode or vanish as they pass through many time steps. LSTM networks were designed to address the vanishing-gradient side of this weakness, which made them one of the most influential architectures for sequence modeling in deep learning.

In this lecture, you’ll learn:

- What LSTMs are

- Why they outperform simple RNNs

- Internal gate mechanics

- Memory cell architecture

- How information flows through time

- Real-world use cases

- Implementation examples

What Are LSTM Networks?

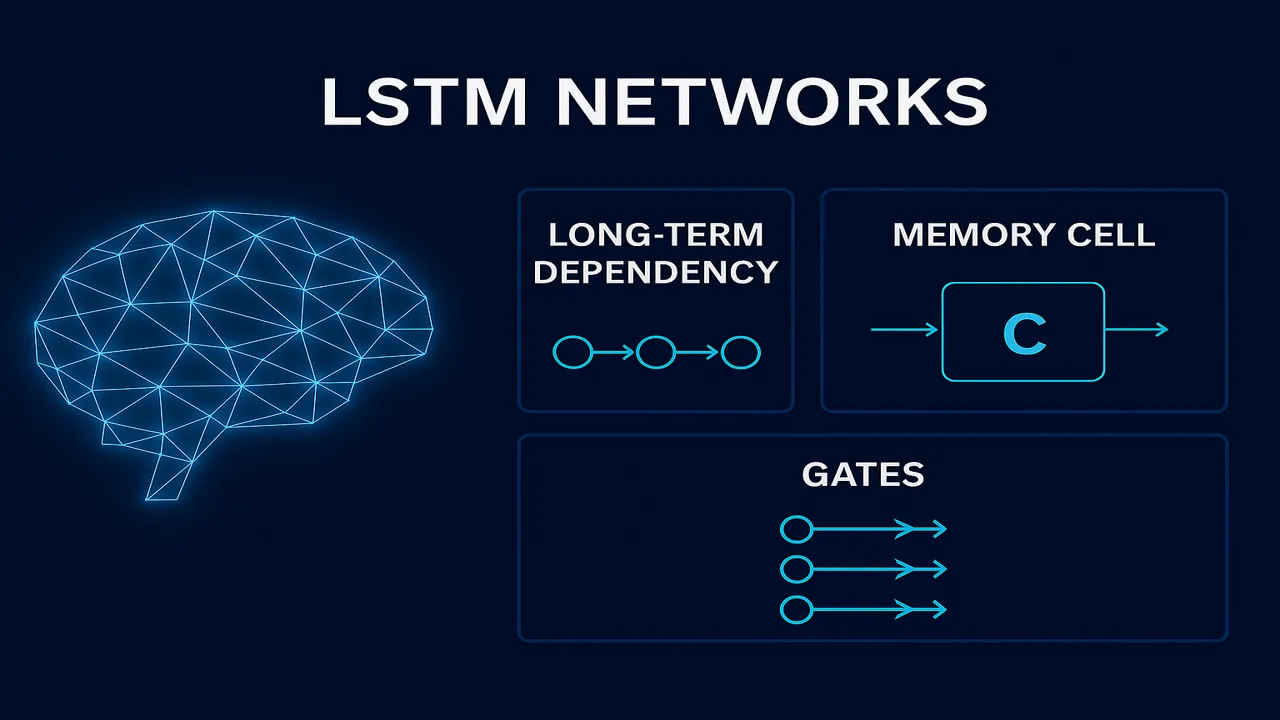

Long Short-Term Memory (LSTM) is a specialized recurrent neural network architecture that can learn long-range dependencies by controlling how information is stored, updated, or forgotten over time.

While a traditional RNN passes only a hidden state from step to step, an LSTM adds a memory cell (the cell state) and three gates (forget, input, and output) that regulate what is written to, kept in, and read from that cell.

Why Do We Need LSTMs? (The Problem With RNNs)

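Simple RNNs struggle because gradients shrink as they are propagated backward through many time steps: each step multiplies the gradient by the recurrent weight and the derivative of the activation, and when these factors are smaller than 1 the product decays exponentially. This is known as the vanishing gradient problem.

As a result, a simple RNN has difficulty learning dependencies on inputs far in the past; in effect, it forgets older information.

LSTMs mitigate this by storing long-term information in a gated cell state that is updated additively, so gradients along that path are scaled by the forget gate rather than repeatedly squashed.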
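To make the gradient argument concrete, here is a minimal sketch assuming a one-unit (scalar) RNN $h_t = \tanh(w h_{t-1} + x_t)$ with illustrative values $w = 0.9$, a constant input of 0.5, and 50 time steps. It multiplies the per-step derivatives to obtain $\partial h_T / \partial h_0$ and compares this with the direct cell-state path of an LSTM, whose per-step factor is just the forget gate (assumed fixed at 0.99 here). The function names are hypothetical, and the LSTM term deliberately ignores the indirect paths that run through the gates.

```python
import math

def rnn_gradient_factor(w, inputs, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h = h0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2), since tanh' = 1 - tanh^2
        grad *= w * (1.0 - h * h)
    return grad

def lstm_cell_path_factor(forget_gates):
    """Return the direct-path term d C_T / d C_0, i.e. the product of forget gates.
    Simplification: ignores gradient paths through h_{t-1} into the gates."""
    grad = 1.0
    for f in forget_gates:
        grad *= f
    return grad

T = 50
inputs = [0.5] * T  # illustrative constant input
rnn = rnn_gradient_factor(w=0.9, inputs=inputs)
lstm = lstm_cell_path_factor([0.99] * T)
print(f"RNN  |dh_T/dh_0| after {T} steps: {abs(rnn):.2e}")
print(f"LSTM |dC_T/dC_0| along the cell path: {lstm:.3f}")
```

The RNN factor collapses toward zero within a few dozen steps, while the cell-state path keeps most of its magnitude as long as the forget gate stays close to 1.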

LSTM Architecture Overview

Each LSTM unit contains:

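Forget Gate ($f_t$)

Decides which parts of the previous memory $C_{t-1}$ to discard.

If $f_t \approx 0$ → forget

If $f_t \approx 1$ → keep

Input Gate ($i_t$)

Determines how much of the new candidate information should be written into memory.

Candidate Memory ($\tilde{C}_t$)

A vector of new candidate values, produced by a tanh layer so that each entry lies between -1 and 1.

Memory Update ($C_t$)

The old memory, scaled by the forget gate, is added to the candidate memory, scaled by the input gate.

Output Gate ($o_t$)

Controls which parts of the memory (after tanh squashing) are exposed as the hidden state $h_t$.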
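As a worked illustration with made-up scalar values for a single memory unit, suppose the forget gate is $f_t = 0.9$, the previous memory is $C_{t-1} = 2.0$, the input gate is $i_t = 0.5$, the candidate is $\tilde{C}_t = 0.8$, and the output gate is $o_t = 0.6$. The memory update adds the gated old memory to the gated candidate, and the output gate then scales the tanh-squashed memory:

$$C_t = f_t C_{t-1} + i_t \tilde{C}_t = 0.9 \times 2.0 + 0.5 \times 0.8 = 1.8 + 0.4 = 2.2$$

$$h_t = o_t \tanh(C_t) = 0.6 \times \tanh(2.2) \approx 0.6 \times 0.9757 \approx 0.585$$

With $f_t = 0.05$ instead, the first term would shrink from 1.8 to 0.1, so almost all of the old memory would be forgotten.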


Mathematical Formulation (Simple & Clear)

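Forget Gate:

$$f_t = \sigma(W_f \cdot [h_{t-1}, x_t] + b_f)$$

Input Gate:

$$i_t = \sigma(W_i \cdot [h_{t-1}, x_t] + b_i)$$

Candidate Memory:

$$\tilde{C}_t = \tanh(W_c \cdot [h_{t-1}, x_t] + b_c)$$

Memory Update:

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

Output Gate:

$$o_t = \sigma(W_o \cdot [h_{t-1}, x_t] + b_o)$$

Hidden State:

$$h_t = o_t \odot \tanh(C_t)$$

Here $\sigma$ is the logistic sigmoid, $[h_{t-1}, x_t]$ is the concatenation of the previous hidden state and the current input, and $\odot$ denotes element-wise multiplication.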

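Because the cell state is updated additively, with the old memory scaled only by the forget gate, information can be carried across many time steps largely unchanged whenever $f_t$ stays close to 1. The same path lets gradients flow backward without being repeatedly squashed, which is why LSTMs handle long sequences better than simple RNNs.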
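The six equations translate almost line for line into code. The sketch below is a minimal, dependency-free forward pass in plain Python (lists instead of tensors) with small random illustrative weights and no training. The helpers `lstm_step`, `init_params`, and `lstm_forward` are hypothetical names, not a library API; frameworks such as Keras store the gate weights in combined kernel matrices and operate on batched tensors instead.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM time step, following the gate equations above."""
    z = h_prev + x_t  # list concatenation = [h_{t-1}, x_t]
    f = [sigmoid(a + b) for a, b in zip(matvec(p["Wf"], z), p["bf"])]          # forget gate
    i = [sigmoid(a + b) for a, b in zip(matvec(p["Wi"], z), p["bi"])]          # input gate
    c_tilde = [math.tanh(a + b) for a, b in zip(matvec(p["Wc"], z), p["bc"])]  # candidate
    o = [sigmoid(a + b) for a, b in zip(matvec(p["Wo"], z), p["bo"])]          # output gate
    c = [ft * cp + it * ct for ft, cp, it, ct in zip(f, c_prev, i, c_tilde)]   # memory update
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]                           # hidden state
    return h, c

def init_params(input_size, hidden_size, seed=0):
    """Small random weights; each W is hidden_size x (hidden_size + input_size)."""
    rng = random.Random(seed)
    cols = hidden_size + input_size
    p = {}
    for g in ("f", "i", "c", "o"):
        p["W" + g] = [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                      for _ in range(hidden_size)]
        p["b" + g] = [0.0] * hidden_size
    return p

def lstm_forward(sequence, hidden_size, p):
    """Run the cell over a list of input vectors, starting from zero states."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    hs = []
    for x_t in sequence:
        h, c = lstm_step(x_t, h, c, p)
        hs.append(h)
    return hs, c

# Usage: 3 time steps of 3-feature inputs through a 4-unit cell
params = init_params(input_size=3, hidden_size=4)
seq = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1], [0.2, 0.2, 0.2]]
hs, c_final = lstm_forward(seq, hidden_size=4, p=params)
print(len(hs), len(hs[0]))
```

Setting the forget-gate bias high and the input-gate bias low makes `lstm_step` return a cell state almost identical to `c_prev`, which is the "keep" behavior described for $f_t \approx 1$.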

Advantages of LSTMs

Learns long-term dependencies

Mitigates the vanishing gradient problem

Works well on sequential data

Powerful for time-series prediction

More stable to train than simple RNNs on long sequences

Supports variable-length sequences
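In practice, a batch of variable-length sequences is usually padded to a common length, and a mask tells the recurrent layer which positions to skip. The sketch below shows the idea in plain Python with a hypothetical helper `pad_and_mask`, assuming token ID 0 is reserved for padding. In Keras, `tf.keras.utils.pad_sequences` performs the padding (it pads at the start by default, `padding='pre'`, whereas this sketch pads at the end), and `Embedding(..., mask_zero=True)` derives an equivalent mask that the `LSTM` layer consumes.

```python
def pad_and_mask(sequences, pad_value=0):
    """Right-pad integer sequences to a common length and build a boolean mask
    (True = real token, False = padding)."""
    max_len = max(len(s) for s in sequences)
    padded = [list(s) + [pad_value] * (max_len - len(s)) for s in sequences]
    mask = [[True] * len(s) + [False] * (max_len - len(s)) for s in sequences]
    return padded, mask

batch = [[5, 8, 2], [7], [3, 9]]  # illustrative token IDs of different lengths
padded, mask = pad_and_mask(batch)
print(padded)
print(mask)
```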

TensorFlow Keras LSTM Layer Reference

https://www.tensorflow.org/api_docs/python/tf/keras/layers/LSTM

Real-World Applications of LSTM Networks

Speech Recognition

Maps sequences of audio frames to phonemes, characters, or words, using the context of earlier frames.

Text Generation & NLP

Used for chatbots, language modeling, and sentiment analysis.

Music Generation

LSTMs learn musical patterns and generate new sequences.

Stock Market Forecasting

Predicts future price trends using past data.

Healthcare Time-Series

Used in ECG analysis and for detecting patterns in patient-monitoring data.
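For the forecasting-style applications above (price series, ECG traces, monitoring signals), the raw series is typically cut into fixed-length windows, each paired with the value that follows it, before being fed to an LSTM. A minimal sketch in plain Python, with a hypothetical helper `make_windows` and made-up prices:

```python
def make_windows(series, window):
    """Split a 1-D series into (past `window` values, next value) training pairs."""
    X, y = [], []
    for start in range(len(series) - window):
        X.append(series[start:start + window])  # input: `window` consecutive values
        y.append(series[start + window])        # target: the value right after them
    return X, y

prices = [10.0, 10.5, 10.2, 10.8, 11.1, 11.0]  # made-up illustrative values
X, y = make_windows(prices, window=3)
print(X)
print(y)
```

Each window would then be reshaped to `(window, 1)`, one feature per time step, to match the `(batch, timesteps, features)` input that the Keras `LSTM` layer expects.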

Example: Predicting Next Word Using LSTM

Dataset: IMDB Movie Reviews

Task: Predict the next word

Model: Keras LSTM
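Before training, each tokenized review has to be turned into (context, next word) pairs. `tf.keras.datasets.imdb.load_data()` returns each review as a list of integer word indices; the sketch below, using a hypothetical helper `prefix_pairs` and assuming ID 0 is free for padding, pairs every left-padded prefix (truncated to the last `max_len` tokens) with the word that follows it. The targets come out as integer IDs, which suits a sparse categorical loss; `categorical_crossentropy` would need them one-hot encoded.

```python
def prefix_pairs(token_ids, max_len, pad_id=0):
    """For each position i >= 1, pair the left-padded prefix token_ids[:i]
    (truncated to its last max_len tokens) with the next token token_ids[i]."""
    contexts, targets = [], []
    for i in range(1, len(token_ids)):
        prefix = token_ids[max(0, i - max_len):i]
        contexts.append([pad_id] * (max_len - len(prefix)) + prefix)
        targets.append(token_ids[i])
    return contexts, targets

review = [12, 7, 45, 3]  # hypothetical word IDs for one short review
X, y = prefix_pairs(review, max_len=3)
print(X)
print(y)
```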

Code:

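from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Embedding, LSTM, Dense

vocab_size = 10000  # illustrative vocabulary size; set to your tokenizer's size

model = Sequential()
model.add(Embedding(vocab_size, 128))               # word IDs -> 128-d vectors
model.add(LSTM(128))                                # summarizes the context sequence
model.add(Dense(vocab_size, activation='softmax'))  # probability of each next word

model.compile(
    loss='sparse_categorical_crossentropy',  # integer next-word IDs; use 'categorical_crossentropy' only with one-hot targets
    optimizer='adam',
    metrics=['accuracy']
)

Summary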

LSTM networks largely mitigate the vanishing gradient problem by using gates and an additively updated memory cell.

They are powerful for sequence prediction, language modeling, speech analysis, and time-series forecasting.

People also ask:

They maintain long-term memory using gated architecture.

Yes, especially for real-time prediction and small datasets.

Yes, much better than basic RNNs.

Sigmoid for gates, tanh for memory transformation.

Absolutely; they are one of the most effective architectures for it.