Concept Learning is one of the foundational theoretical frameworks in Machine Learning. It explains how a learner identifies a target concept by analyzing examples, forming hypotheses, and gradually narrowing down what is correct and what is impossible. This lecture provides a detailed and intuitive understanding of hypothesis space, general-to-specific ordering, version spaces, and the Candidate Elimination Algorithm, supported with a real-world example.

What Is Concept Learning?

Concept Learning is the task of inferring a general rule (concept) from observed, labeled training examples.

It answers a simple question:

“Given some examples, what rule correctly classifies them?”

Concept learning treats ML as a search problem:

the learner searches through many possible hypotheses and identifies those consistent with the data.

This theoretical foundation is essential for understanding advanced ML algorithms like Decision Trees, Naive Bayes, SVMs, and Neural Networks.

Hypothesis Space (H)

The hypothesis space is the set of all possible hypotheses that a learner can propose based on the allowed attribute structure.

- A hypothesis (h) is a function that maps inputs to outputs.

- The target concept (c) is the true function the learner is trying to discover.

Example

For a weather prediction problem with attributes such as Outlook, Wind, and Humidity, a hypothesis could be:

h(x): “Play = Yes when Outlook = Sunny AND Humidity = Normal.”

If you can form 1,000 such rules with different combinations of attributes, then your hypothesis space (H) contains 1,000 possible hypotheses.

Why Hypothesis Space Matters

The hypothesis space defines how flexible a model can be.

A larger H can represent more complex concepts, but makes the search harder.

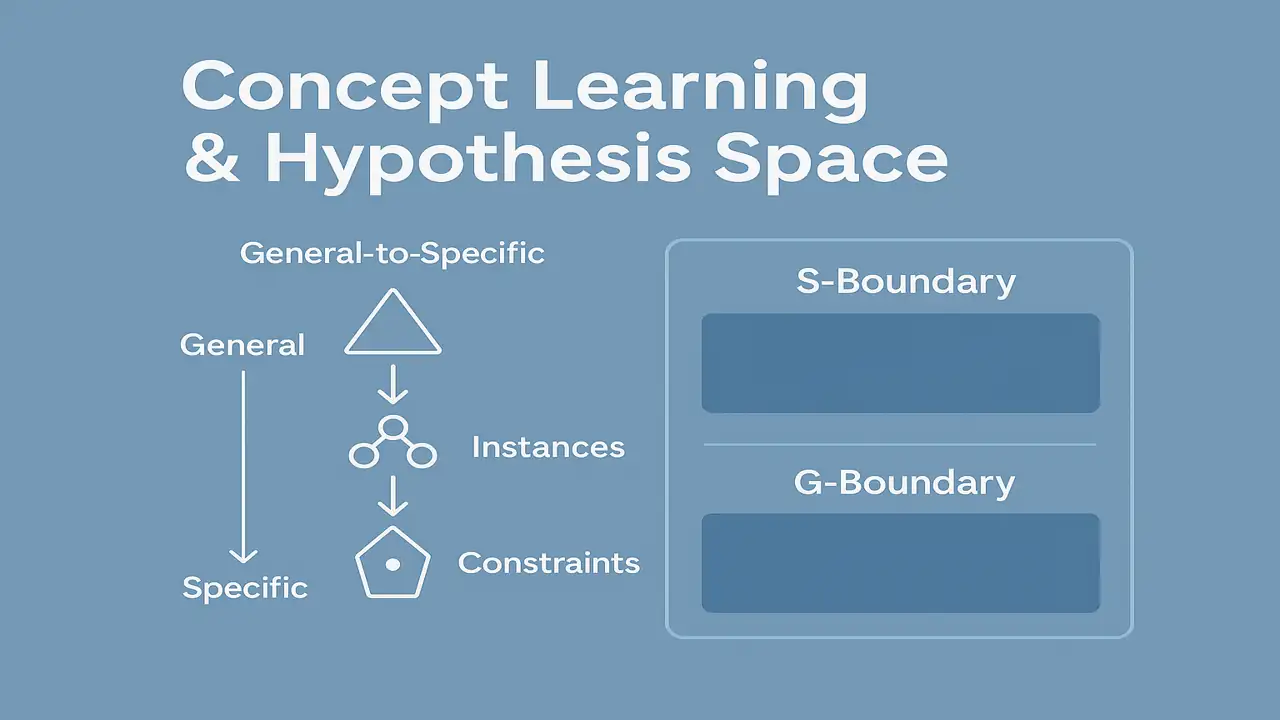

General-to-Specific Ordering

In concept learning, hypotheses are structured in an order ranging from most general to most specific.

Most General Hypothesis

Predicts “Yes” for every instance.

Example:

h(x) = “Always Play.”

Most Specific Hypothesis

Predicts “Yes” only when every attribute exactly matches a single example.

Example:

h(x) = “Play only when Outlook = Sunny AND Wind = Weak AND Humidity = Normal AND Temp = Warm.”

Purpose of This Ordering

- Helps organize the search process

- Guides how hypotheses evolve when new data arrives

- Makes boundary-based learning possible (version space)

General-to-specific ordering is the foundation for the Candidate Elimination Algorithm.

Version Spaces

A Version Space contains all hypotheses that are consistent with the current training data.

As the learner sees more examples:

- Inconsistent hypotheses are removed

- Consistent hypotheses remain

This creates a progressively smaller region of valid rules.

The Version Space has two boundaries:

A. S – The Specific Boundary

The set of most specific hypotheses that still match all positive examples.

B. G – The General Boundary

The set of most general hypotheses that still avoid contradicting negative examples.

Why Version Spaces Are Important

- They represent the “knowledge state” of the learner.

- They shrink over time as more training examples are added.

- If the dataset is learnable, S and G eventually converge to the target concept.

Candidate Elimination Algorithm

The Candidate Elimination Algorithm is a formal method to maintain and update the S and G boundaries as new examples arrive.

This algorithm ensures that all possible consistent hypotheses remain, and all impossible ones are removed.

How the Algorithm Works

Start with:

- S = most specific hypothesis

- G = most general hypothesis

For each training example:

If the example is Positive:

The hypothesis must cover this example.

So:

- Generalize S

- Remove any members of G that do not cover the example

If the example is Negative:

The hypothesis must not cover this example.

So:

- Specialize G

- Remove any members of S that incorrectly cover the example

Outcome

After processing all examples, the S and G boundaries represent the entire version space.

This method guarantees that no inconsistent hypothesis remains.

Practical Example – Learning the Concept of Weekend Picnic Weather

Consider a learner that wants to predict whether the weather is suitable for a weekend picnic.

Attributes:

- Outlook (Sunny, Cloudy, Rainy)

- Wind (Strong, Weak)

- Temperature (Cool, Mild, Hot)

- Humidity (High, Normal)

Training Examples:

| Outlook | Wind | Temp | Humidity | Picnic? |

|---|---|---|---|---|

| Sunny | Weak | Mild | Normal | Yes |

| Cloudy | Weak | Mild | High | Yes |

| Rainy | Strong | Cool | High | No |

| Sunny | Strong | Hot | High | No |

Learning Process:

- Positive examples make S more general

- Negative examples make G more specific

After updates, a possible rule (hypothesis) inside the version space may be:

“Picnic = Yes when Wind = Weak AND (Outlook = Sunny OR Cloudy).”

This is the learned concept of weekend picnic weather.

Summary

Concept Learning provides the theoretical foundation for understanding how machine learning models generalize from examples. By mastering hypothesis space, version spaces, general-to-specific ordering, and the Candidate Elimination Algorithm, students develop a deep understanding of the learning process before advancing to more complex models.

These ideas remain essential in modern ML, especially in explainability, model interpretability, and designing structured learning systems.

People also ask:

The main goal of Concept Learning is to identify a general rule (concept) that correctly classifies examples based on their attributes. It helps a learner understand how hypotheses map input features to output labels.

The hypothesis space (H) is the complete set of all hypotheses that a learner can possibly form using the given attributes. It defines all potential rules that might explain the target concept.

The Specific boundary (S) contains the most specific hypotheses consistent with the data, while the General boundary (G) contains the most general hypotheses that still remain consistent. Together, S and G define the range of all possible valid hypotheses.

The Candidate Elimination Algorithm processes each training example and refines the S and G boundaries. Positive examples cause S to become more general, while negative examples cause G to become more specific. The algorithm eliminates all hypotheses that do not match the data.

Yes. Although modern ML models such as neural networks and decision trees are more advanced, Concept Learning remains essential in understanding how hypotheses are formed, how generalization occurs, and how search spaces are narrowed. It also helps in model interpretability and explainability.