Convolution operations form the core engine of Convolutional Neural Networks (CNNs). Vision models from VGG and ResNet to YOLO and EfficientNet rely on convolution to extract meaningful patterns from images.

A convolution layer identifies edges, textures, colors, shapes, and high-level structures that help the model understand visual data.

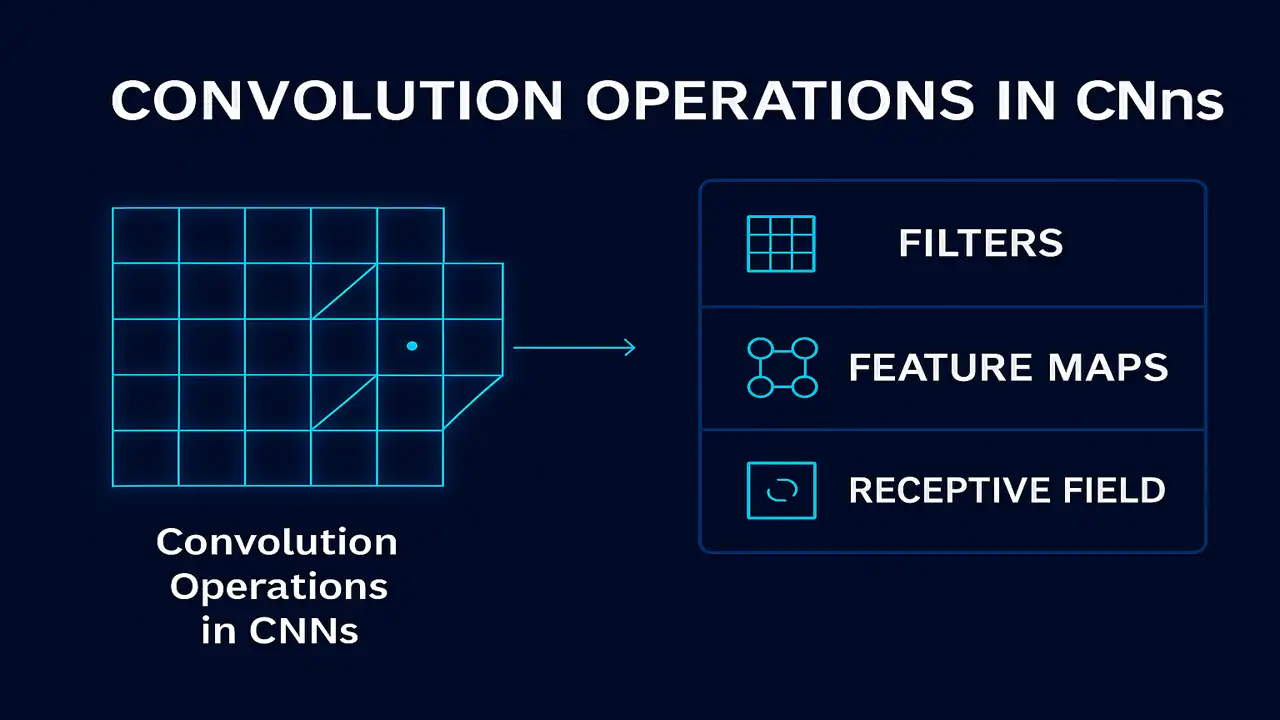

Let’s break down filters, kernels, receptive fields, feature maps, and how convolution actually works.

What Is Convolution?

Convolution is a mathematical operation where a small matrix (kernel/filter) slides across the image and produces a new matrix called a feature map.

CNNs use convolution to detect:

- edges

- patterns

- corners

- textures

- repeated shapes

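The same filter moves across the entire image, so a pattern is detected wherever it appears. This property is called translation equivariance: shift the object and its response in the feature map shifts with it. Combined with pooling, it lets a CNN recognize an object regardless of where it sits in the image.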

Filters (Kernels) in Convolution

A filter is a small matrix like 3×3, 5×5, or 7×7.

Example 3×3 kernel:

| -1 | 0 | 1 |

| -1 | 0 | 1 |

| -1 | 0 | 1 |

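This is a Prewitt-style edge detector. It responds to vertical edges, that is, changes in brightness from left to right. (The Sobel kernel is similar but weights the middle row by 2: −2, 0, 2.)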

Different filters detect different features:

- edge filters

- blur filters

- sharpen filters

- texture filters

Each filter represents a specific pattern detector. In a CNN the filter values are not hand-designed; they are learned during training.
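To make the idea of "different filters, different features" concrete, here is a small sketch that applies three hand-written 3×3 kernels to the same patch. The kernel values, the patch, and the helper name `response` are illustrative assumptions, not taken from any particular model.

```python
def response(patch, kernel):
    """Multiply a patch by a kernel element-wise and sum the products."""
    return sum(p * w
               for p_row, k_row in zip(patch, kernel)
               for p, w in zip(p_row, k_row))

# Hand-designed 3x3 kernels (illustrative values; a CNN learns its own).
kernels = {
    "vertical_edge": [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]],
    "box_blur": [[1 / 9] * 3 for _ in range(3)],
    "sharpen": [[0, -1, 0], [-1, 5, -1], [0, -1, 0]],
}

# A flat patch with one bright pixel in the centre.
patch = [[10, 10, 10],
         [10, 50, 10],
         [10, 10, 10]]

responses = {name: response(patch, k) for name, k in kernels.items()}
for name, value in responses.items():
    print(f"{name}: {value:.2f}")
```

The edge kernel gives 0 because the left and right columns match, the blur kernel returns the patch average, and the sharpen kernel exaggerates the bright centre.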

How Convolution Works (Step-by-Step)

Let’s use a 3×3 filter on a 5×5 image region.

Step 1 – Select 3×3 patch

Step 2 – Multiply element-wise with filter

Step 3 – Sum all values

Step 4 – Write output in feature map

Step 5 – Slide filter (stride = 1 or 2)

This process repeats until the entire image is processed.

The result is a new feature map highlighting the areas where the filter detected strong responses (edges, patterns, etc.).
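The five steps above can be sketched in plain Python. This is a minimal version that assumes a square kernel, a single-channel image, and no padding; the function name `conv2d` and the sample image are made up for illustration. Like most deep learning frameworks, it does not flip the kernel, so strictly speaking it computes cross-correlation.

```python
def conv2d(image, kernel, stride=1):
    """Slide `kernel` over `image` (no padding) and return the feature map."""
    k = len(kernel)  # assumes a square k x k kernel
    out_h = (len(image) - k) // stride + 1
    out_w = (len(image[0]) - k) // stride + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride      # Step 1: select the patch
            total = 0
            for a in range(k):
                for b in range(k):
                    # Steps 2 and 3: multiply element-wise, then sum
                    total += image[top + a][left + b] * kernel[a][b]
            row.append(total)                       # Step 4: write the output
        feature_map.append(row)                     # Step 5: slide and repeat
    return feature_map

# 5x5 image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]
kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

feature_map = conv2d(image, kernel)            # 3x3 output at stride 1
strided_map = conv2d(image, kernel, stride=2)  # 2x2 output at stride 2
print(feature_map)
print(strided_map)
```

The response is zero over the flat dark region and jumps wherever the window straddles the dark-to-bright boundary.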

Feature Maps

A feature map is the output of a convolution.

If you use 32 filters, you get 32 feature maps.

Early layers detect:

- edges

- curves

- corners

Deep layers detect:

- eyes

- wheels

- patterns

- high-level shapes

Receptive Field

The receptive field describes how much of the input image influences a particular neuron.

Small receptive field:

- captures fine details

Large receptive field:

- captures high-level structures

- essential for understanding context

Receptive field grows with:

- deeper layers

- larger filters

- pooling

- strided convolution
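The growth of the receptive field can be estimated with a standard recurrence: each layer adds (kernel size − 1) × the current spacing between neighboring outputs, and each stride multiplies that spacing. The sketch below assumes square kernels and no dilation; the function name and the example layer stacks are hypothetical.

```python
def receptive_field(layers):
    """layers: list of (kernel_size, stride) tuples, ordered input to output."""
    rf = 1    # a single input pixel sees only itself
    jump = 1  # distance in input pixels between neighbouring outputs
    for kernel_size, stride in layers:
        rf += (kernel_size - 1) * jump
        jump *= stride
    return rf

three_convs = [(3, 1), (3, 1), (3, 1)]   # three 3x3 convolutions, stride 1
with_pooling = [(3, 1), (2, 2), (3, 1)]  # 3x3 conv, 2x2 pool, 3x3 conv
with_stride = [(3, 2), (3, 1)]           # strided 3x3 conv, then 3x3 conv

print(receptive_field(three_convs))
print(receptive_field(with_pooling))
print(receptive_field(with_stride))
```

Pooling and striding are treated the same way here, since both increase the spacing between outputs and so make every later layer widen the receptive field faster.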

Effect of Stride & Padding on Convolution

Stride = step size

- Stride 1 → high detail

- Stride 2 → downsampling

Padding

- SAME padding adds zeros around the border so the output keeps the input size (at stride 1)

- VALID padding adds no border, so the output shrinks

Together, they control:

- spatial resolution

- computation cost

- receptive field growth
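The effect of stride and padding on spatial size follows the usual formula: output = floor((n + 2p − k) / s) + 1, where n is the input size, k the kernel size, p the padding per side, and s the stride. A short sketch, with a hypothetical helper name and a 32-pixel input chosen only as an example:

```python
def conv_output_size(n, k, stride=1, padding=0):
    """Output length along one spatial dimension of a convolution."""
    return (n + 2 * padding - k) // stride + 1

valid = conv_output_size(32, 3)                              # no padding
same = conv_output_size(32, 3, padding=1)                    # pad 1 per side
downsampled = conv_output_size(32, 3, stride=2, padding=1)   # stride 2

print(valid, same, downsampled)
```

For a 3×3 kernel, one pixel of padding per side keeps the size at stride 1, while stride 2 halves it.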

Types of Convolutions

1. Standard Convolution (basic CNN)

Each filter spans all input channels and sums across them to produce one output channel.

2. Depthwise Convolution (MobileNet)

Each filter applies to one input channel only, so it needs far fewer weights and multiplications than a standard convolution.

3. Pointwise Convolution (1×1 filters)

Mixes information across channels at each pixel position without looking at neighboring pixels. Used heavily in:

- ResNet

- YOLO

- EfficientNet

- MobileNet
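A depthwise convolution followed by a pointwise convolution forms a depthwise separable convolution, the building block MobileNet is known for. The sketch below compares weight counts against a standard convolution. The layer sizes (3×3 kernel, 64 input channels, 128 output channels) are assumed for illustration, and biases are ignored.

```python
def standard_conv_weights(k, c_in, c_out):
    """Every filter spans all input channels."""
    return k * k * c_in * c_out

def depthwise_separable_weights(k, c_in, c_out):
    """One k x k filter per input channel, then a 1x1 conv to mix channels."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise

standard = standard_conv_weights(3, 64, 128)
separable = depthwise_separable_weights(3, 64, 128)
print(standard, separable, round(standard / separable, 1))
```

With these sizes the separable version uses roughly one eighth of the weights.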

4. Dilated Convolution

Spaces out the kernel taps to expand the receptive field without adding parameters.
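A dilated kernel keeps the same number of weights but inserts gaps between them. With kernel size k and dilation d, the span it covers is k + (k − 1)(d − 1). A small sketch along one dimension, with hypothetical helper names:

```python
def effective_kernel_size(k, dilation):
    """Span in input pixels covered by a k-tap kernel with the given dilation."""
    return k + (k - 1) * (dilation - 1)

def tap_offsets(k, dilation):
    """Input positions (relative to the first tap) that the kernel reads."""
    return [i * dilation for i in range(k)]

for d in (1, 2, 4):
    print(d, tap_offsets(3, d), effective_kernel_size(3, d))
```

A 3-tap kernel always has 3 weights, yet at dilation 4 it covers 9 input pixels.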

5. Transposed Convolution

Upsamples a feature map to a larger spatial size. Used in:

- image generation

- segmentation

- super-resolution
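One way to picture a transposed convolution is as a scatter: each input value places a scaled copy of the kernel into the output, with copies spaced by the stride. The 1D sketch below assumes no padding; the function name and inputs are made up for illustration.

```python
def transposed_conv1d(x, kernel, stride=2):
    """Scatter a scaled copy of `kernel` into the output for each input value."""
    k = len(kernel)
    out = [0] * ((len(x) - 1) * stride + k)  # output length without padding
    for i, value in enumerate(x):
        for j, w in enumerate(kernel):
            out[i * stride + j] += value * w  # overlapping copies add up
    return out

upsampled = transposed_conv1d([1, 2, 3], [1, 1], stride=2)
overlapping = transposed_conv1d([1, 2, 3], [1, 2, 1], stride=2)
print(upsampled)
print(overlapping)
```

With a kernel of ones and stride 2, the result simply repeats each input value twice. A learned kernel lets the network pick its own upsampling pattern.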


Real-World Example: Edge Detection

Given this matrix:

| 10 | 20 | 25 |

| 15 | 35 | 40 |

| 12 | 18 | 22 |

Apply the same edge filter as above (a Prewitt kernel):

| -1 | 0 | 1 |

| -1 | 0 | 1 |

| -1 | 0 | 1 |

Compute convolution:

(10×−1 + 20×0 + 25×1) +

(15×−1 + 35×0 + 40×1) +

(12×−1 + 18×0 + 22×1)

= (−10 + 0 + 25) +

(−15 + 0 + 40) +

(−12 + 0 + 22)

= 15 + 25 + 10

= 50

A large output means a strong vertical edge: here the right column is consistently brighter than the left.

This is how CNNs detect line structures.
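The arithmetic above can be checked with a few lines of Python. The mirrored patch is an added illustration, not part of the original example: it shows that the sign of the output tells you which side of the edge is brighter.

```python
patch = [[10, 20, 25],
         [15, 35, 40],
         [12, 18, 22]]
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

def row_sums(patch, kernel):
    """Multiply each row by the matching kernel row and sum it."""
    return [sum(p * w for p, w in zip(p_row, k_row))
            for p_row, k_row in zip(patch, kernel)]

rows = row_sums(patch, kernel)
total = sum(rows)

# Flip the patch left to right: the bright side is now on the left.
mirrored = [row[::-1] for row in patch]
mirrored_total = sum(row_sums(mirrored, kernel))

print(rows, total, mirrored_total)
```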

Why Convolution Is Better Than Fully Connected Layers

Weight sharing

A single filter is reused across the entire image.

Fewer parameters

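Because weights are shared, a convolution layer needs far fewer parameters than a fully connected layer on the same input, which makes it cheaper to store and train.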

Local connectivity

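Each output depends only on a small neighborhood of the input, loosely mirroring how neurons in the visual cortex respond to small regions of the visual field.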

Spatial structure preserved

Feature maps keep the 2D layout of the image, so neighboring pixels stay neighbors from layer to layer.
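To put rough numbers on weight sharing and parameter count, the sketch below compares one fully connected layer with one convolution layer on the same input. The sizes are assumptions chosen for illustration: a 224×224 RGB image, 1000 fully connected units, and 64 filters of size 3×3.

```python
height, width, channels = 224, 224, 3

# Fully connected: every unit has one weight per input value.
fc_units = 1000
fc_weights = height * width * channels * fc_units

# Convolution: each filter has k*k weights per input channel, reused everywhere.
num_filters, k = 64, 3
conv_weights = k * k * channels * num_filters
conv_biases = num_filters

print(f"fully connected: {fc_weights:,} weights")
print(f"convolution:     {conv_weights + conv_biases:,} parameters")
```

The convolution layer's parameter count does not depend on the image size at all, which is the practical payoff of reusing the same filter at every position.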

Summary

Convolution operations extract meaningful features from images using filters that slide across the input. They produce feature maps, build receptive fields, and form the foundation of all CNN-based models.
