This lecture explains Data Preprocessing in Data Mining with in-depth coverage of data cleaning, handling missing values, integration, transformation, reduction, diagrams, case studies, and Python examples ideal for BS AI, BS CS, BS IT & Data Science students.

Data Preprocessing is one of the most critical phases in Data Mining and Machine Learning. Real-world data is rarely perfect. It is incomplete, inconsistent, noisy, duplicated, and often scattered across different sources. If you feed such “dirty” data into any mining algorithm, the results will be unreliable and misleading. That’s why over 60–80% of a data scientist’s time is spent on preprocessing alone.

In this lecture, you will explore how data is prepared before applying mining techniques. You’ll learn how to clean data, merge multiple sources, transform variables, reduce dimensionality, and prepare datasets for classification, clustering, prediction, and pattern discovery.

Introduction to Data Preprocessing

Why Preprocessing Is Essential

Data Mining algorithms expect clean and well-structured data. Preprocessing ensures that:

- Analytical results are accurate

- Models perform efficiently

- Hidden patterns become visible

- Data is consistent and complete

Without preprocessing, even the best algorithms fail.

What Happens If Preprocessing Is Ignored

If we ignore preprocessing:

- Missing values break algorithms

- Outliers distort predictions

- Duplicates inflate results

- Inconsistent values cause confusion

- Noise hides meaningful patterns

Real-World Motivation

Consider:

- Hospital records with missing test results

- Online store logs missing purchase timestamps

- Financial transaction data containing fake/duplicate entries

Preprocessing is the backbone of real-world data analytics.

Types of Data Quality Issues

Missing Values

Caused by:

- Human mistakes

- Sensor failure

- System errors

- Data corruption

Example:

Height | Weight

170 | 65

165 | ?

180 | 72Noise & Outliers

Noise = random error

Outlier = unusually extreme value

Example outlier:

Customer Age: 20, 21, 19, 18, 97Inconsistent Data

Example:

“Male, female”

“F, M”

“m, f”

Duplicate Records

Occurs in:

- CRM databases

- Online forms

- Web analytics

Data Cleaning Techniques

Handling Missing Data

Techniques:

- Mean/Median imputation

- Mode for categorical values

- Forward/Backward fill

- Predictive filling (KNN Imputer, Regression)

Example (Before/After)

Before:

Age: 25, 27, ?, 30After (Mean=27):

Age: 25, 27, 27, 30Detecting & Removing Outliers

Methods:

- Statistical (Z-score, IQR)

- Visualization (Boxplot)

- Domain rules

Example: Z-score

If Z > 3, mark as outlier.

Smoothing Noisy Data

Techniques:

- Binning

- Regression smoothing

- Moving averages

Resolving Inconsistencies

Methods:

- Standardizing formats

- Using lookup tables

- Applying validation rules

Stanford CS → https://cs.stanford.edu

Data Integration

Combining Multiple Data Sources

Sources include:

- SQL databases

- CSV files

- Cloud storage

- APIs

Schema Integration

Mapping:

Prod_ID ↔ ProductID

Cust_ID ↔ CustomerID

Entity Resolution

Determining whether two entries refer to the same person.

Example:

Ali Ahmad: 03211234567

A. Ahmad: 0321 1234567Data Transformation Techniques

Normalization

Scaling values to 0–1 range.

Formula:

(x - min) / (max - min)Example:

Original “Salary” values:

20000, 50000, 100000Normalized:

0.0, 0.375, 1.0Standardization

Mean = 0

Std dev = 1

Used in:

- SVM

- Logistic regression

Aggregation

Combining values to reduce granularity:

- Hourly → Daily

- Daily → Weekly

Attribute Construction

Creating new meaningful features.

Example:

BMI = Weight(kg) / Height(m)^2Data Reduction Techniques

Dimensionality Reduction

Used for:

- Images

- Genetic data

- High-dimensional vectors

Techniques:

- PCA

- t-SNE

- Autoencoders

Feature Selection

Choosing the most useful attributes.

Methods:

- Filter methods

- Wrapper methods

- Embedded methods (Lasso)

Lecture 2 – Types of Data and Patterns in Data Mining

Sampling

Selecting a smaller representative dataset.

Data Cube Aggregation

Used in OLAP and business analytics.

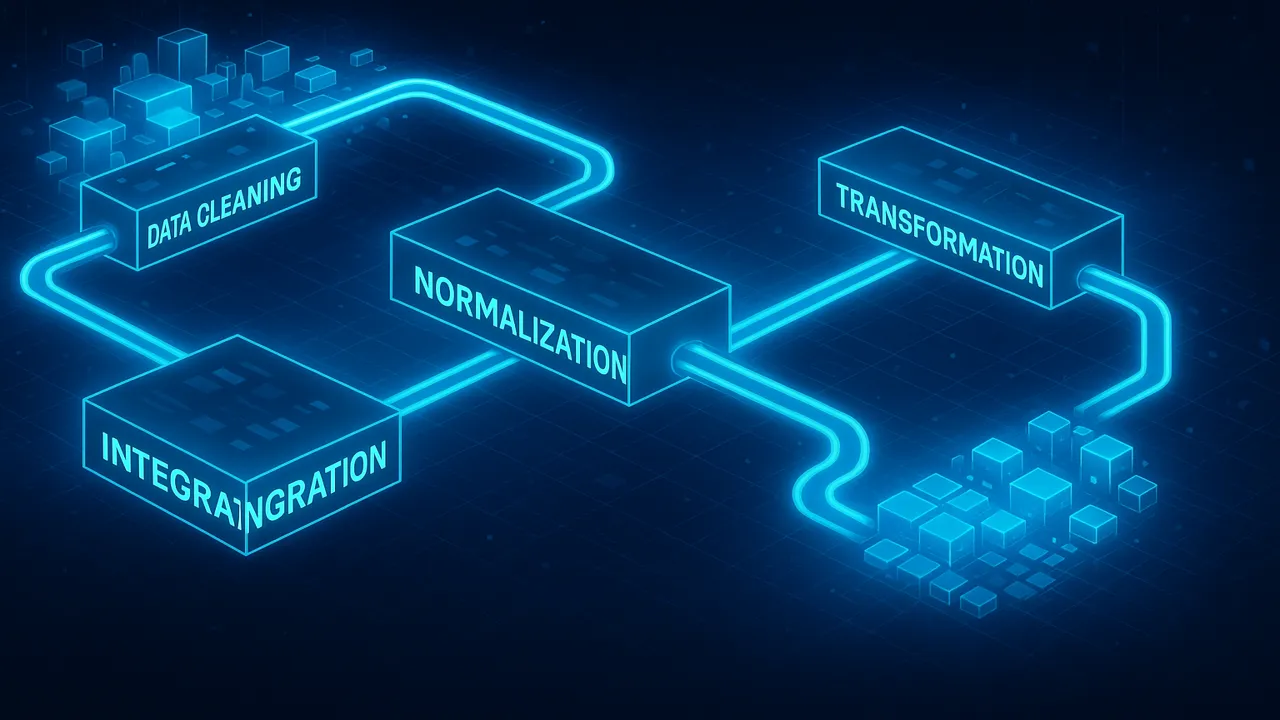

Data Preprocessing Pipeline

[Raw Data]

↓

[Cleaning]

↓

[Integration]

↓

[Transformation]

↓

[Reduction]

↓

[Final Data for Mining]Normalization Example (Before/After)

Original age: 10, 20, 30, 40, 50

Normalized: 0.0, 0.25, 0.50, 0.75, 1.0Practical Examples & Case Studies

E-Commerce Example

Raw Issues:

- Missing prices

- Duplicate orders

- Text-based dates

- Outlier quantities

Preprocessing Steps:

- Remove duplicates

- Convert dates to uniform format

- Fill missing prices

- Detect abnormal purchases

Result:

Cleaner dataset for prediction and recommendation.

Healthcare Case

Raw Issues:

- Missing records

- Noisy sensor data

- Duplicate patient IDs

Preprocessing Steps:

- Interpolation for missing vitals

- Smoothing ECG signals

- Entity matching for patient records

Banking & Fraud Detection

Issues:

- Extremely skewed data

- Hidden outliers

- Temporal patterns

Preprocessing:

- SMOTE

- Time-window grouping

- Outlier suppression

Python Examples for Preprocessing

Handling Missing Values

import pandas as pd

df = pd.read_csv("data.csv")

df.fillna(df.mean(), inplace=True)Standardization

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

scaled = scaler.fit_transform(df[['age','salary']])Handling Outliers

Q1 = df['age'].quantile(0.25)

Q3 = df['age'].quantile(0.75)

IQR = Q3 - Q1

df_clean = df[(df['age'] >= Q1 - 1.5 * IQR) & (df['age'] <= Q3 + 1.5 * IQR)]Common Mistakes in Data Preprocessing

- Removing too much data

- Over-normalizing

- Ignoring categorical variables

- Forgetting to scale test data

- Treating text as numeric

- Using mean imputation blindly

Tools Used for Preprocessing

Python Libraries

- Pandas

- NumPy

- Scikit-learn

Big Data Tools

- Spark MLlib

- Databricks

GUI Tools

- RapidMiner

- WEKA

Summary

Lecture 3 covered the complete process of Data Preprocessing including data cleaning, integration, transformation, reduction, and real-world applications. You learned why preprocessing is essential and how to handle missing values, outliers, noise, and inconsistencies. Practical case studies and Python examples showed how preprocessing prepares data for accurate mining and machine learning.

Lecture 4 – Association Rule Mining Apriori, FP-Growth, Support, Confidence & Lift

People also ask:

To convert raw data into a clean, consistent, and usable format for mining and modeling.

A scaling method that brings numeric values into a range, usually 0–1.

Using mean, median, mode, forward-fill, or predictive algorithms.

Combining multiple sources into one consistent dataset.

Outliers can distort results and reduce model accuracy.