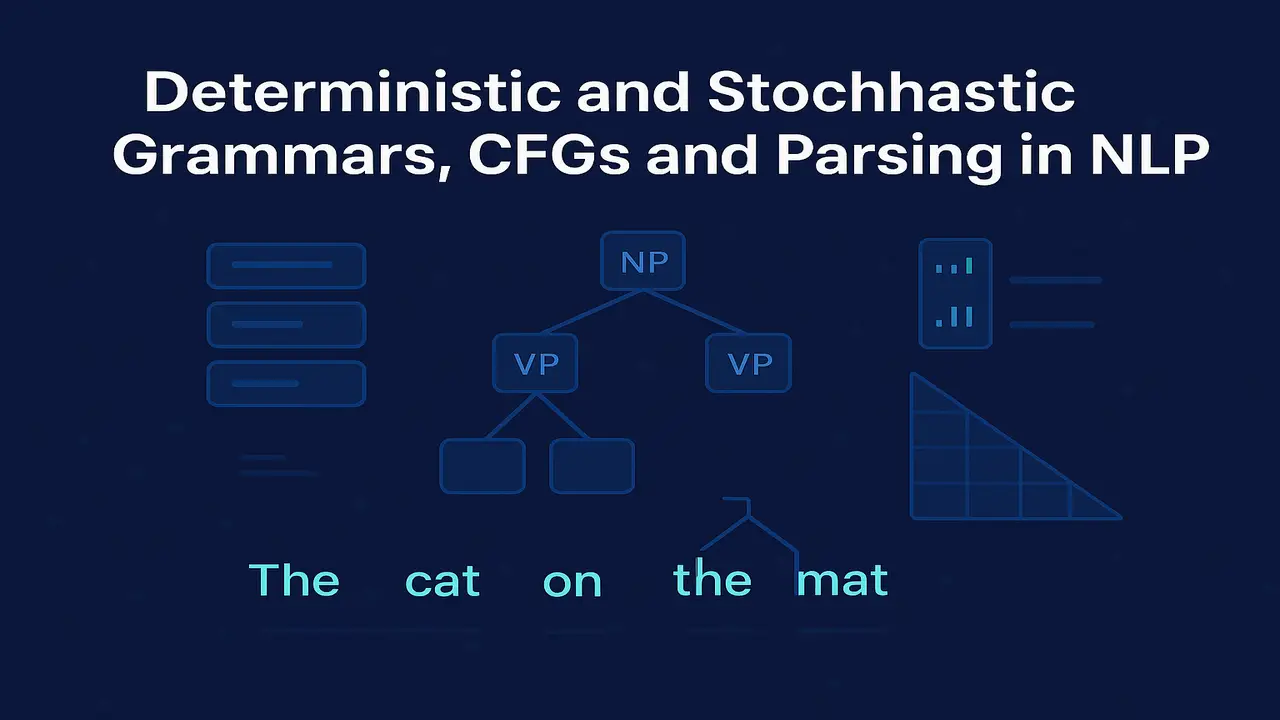

Language is not just a bag of words. The way words are ordered and grouped gives sentences their structure and meaning. Natural Language Processing systems must therefore understand more than isolated tokens. they need at least a partial grasp of syntax. Grammars and parsing algorithms provide a formal way to describe and analyse this structure.

In classical NLP, grammars based on Context Free Grammar (CFG) formalisms were used to capture possible sentence structures, while parsing algorithms built trees that showed how a sentence can be derived from the grammar. Later, probabilistic or stochastic grammars were introduced to prefer some parses over others, addressing the huge ambiguity present in natural language.

This lecture introduces CFGs, explains the difference between deterministic and stochastic grammars, and gives an overview of important parsing algorithms for natural language. The goal is to build a clear intuition for how syntax is represented and processed in NLP systems.

Why grammars and parsing matter in NLP ?

Many tasks benefit from knowing how a sentence is structured.

- Question answering systems often need to identify the subject, object and modifiers in a query to locate the correct answer.

- Information extraction relies on syntactic cues to recognise relations such as “person works for organisation” or “drug treats disease”.

- Machine translation systems use syntactic structure to choose correct word order and agreement in the target language.

- Text summarisation and semantic role labelling often use parse trees as a foundation.

A grammar provides a compact set of rules that define which sentences are allowed in a language and how they can be analyzed into phrases. Parsing applies these rules to a specific sentence to build one or more parse trees.

Context Free Grammars

A Context Free Grammar consists of:

- A set of nonterminal symbols such as S (sentence), NP (noun phrase) and VP (verb phrase).

- A set of terminal symbols, which are the actual words or word categories.

- A special start symbol, usually S.

- A set of production rules of the form A becomes α, where A is a nonterminal and α is a sequence of terminals and nonterminals.

For example, a tiny CFG for simple English sentences might include rules such as:

S → NP VP

NP → Det N

VP → V NP

Det → “the”

N → “cat”

N → “dog”

V → “chased”

Using these rules the sentence “the dog chased the cat” can be derived step by step from S and represented as a parse tree where S branches into NP and VP, and each phrase expands according to the grammar.

CFGs are powerful enough to capture many core syntactic patterns of natural language, including recursion such as nested relative clauses or coordinated structures. At the same time, they are restricted enough to admit efficient parsing algorithms.

Deterministic grammars and deterministic parsing

A grammar or parser is called deterministic when, for any given input and processing strategy, there is essentially only one action to take at each step. This often requires carefully designed grammars that avoid ambiguity under a chosen parsing method.

In programming languages, deterministic parsing is common. Grammars are engineered to be LL(1) or LR(1), meaning that the next production to use can be chosen based on a single lookahead symbol. Compilers rely on this determinism to parse code quickly and unambiguously.

Natural language, however, is inherently ambiguous. For example, the sentence:

“I saw the man with the telescope.”

can be parsed in at least two ways:

- The person has the telescope.

- The man being seen has the telescope.

Any reasonable grammar for English will allow both structures. Purely deterministic parsing cannot easily capture this richness without losing generality.

Nevertheless, deterministic parsing ideas are still useful. Many practical parsers try to follow a single best parse as they scan the input (for example in shift–reduce parsers), using heuristics or probabilities to choose among alternatives rather than exploring all possibilities equally.

Stochastic grammars and Probabilistic CFGs

To handle ambiguity, grammars can be enriched with probabilities. A Probabilistic Context Free Grammar (PCFG) associates each production rule with a probability. The probability of a complete parse tree is the product of the probabilities of all the rules used in that derivation.

For example, a nonterminal PP (prepositional phrase) might have two rules:

- PP → P NP with probability 0.8

- PP → PP PP with probability 0.2

If a parse of a sentence uses the first rule twice and the second rule once, those probabilities contribute to the overall probability of the tree.

Given a sentence with multiple possible parse trees, the preferred analysis is usually the one with highest probability under the PCFG. This formalises the intuition that some structures are more common or natural than others.

PCFGs are a particular example of stochastic grammars. More complex models introduce lexical sensitivity, dependency structures or latent annotations to better reflect real language patterns, but the basic idea remains the same: probabilities guide the choice between competing syntactic analyses.

Parsing algorithms for CFGs

To use grammars in NLP, we need algorithms that can build parse trees for input sentences. Several classic algorithms are widely studied.

- Top-down recursive descent parsing. starting from the start symbol S and expanding according to grammar rules, trying to match the input words left to right.

- Bottom-up shift–reduce parsing. starting from the words and successively combining them into larger phrases using grammar rules.

- Chart parsing algorithms such as CKY (Cocke–Kasami–Younger) and Earley parsing, which use dynamic programming to record partial parses and avoid redundant work.

Chart parsers are especially important in NLP because they can systematically handle ambiguity, sharing substructures among many possible parse trees in a dynamic programming chart. When probabilities are added, these algorithms can be adapted to compute the most probable parse efficiently.

The full online manuscript of Speech and Language Processing provides broader background on grammars, parsing and other NLP topics.

Ambiguity and parse forests

Natural language sentences are often highly ambiguous under CFGs. A simple sentence of eight or ten words may have dozens or even hundreds of valid parse trees. It is usually impractical to list all of them explicitly.

Chart parsers solve this by storing a compact parse forest. Each entry in the chart records that a certain nonterminal can span a particular substring of the sentence, possibly with multiple ways to derive it. The parser can later extract a single best tree or a small number of top trees, depending on the application.

Probabilistic grammars help by providing a scoring mechanism. While many parses may exist, typically only a few have reasonable probability, and the most probable one often aligns with human intuition.

Lecture 5 – Hidden Markov Models and POS Tagging in Natural Language Processing

Step by step outline of the CKY parsing algorithm

The CKY algorithm is a well known bottom-up dynamic programming parser for CFGs that are in Chomsky Normal Form (CNF). In CNF, each production rule is either of the form A → B C or A → w, where A, B and C are nonterminals and w is a terminal.

A high level CKY procedure is:

Step 1. Convert the grammar to CNF if necessary.

Step 2. Let the sentence length be n. Create a triangular table where cell (i, j) will store the set of nonterminals that can derive the substring from position i to j.

Step 3. For each position i in the sentence, look up all rules A → w where w is the word at position i. Add A to cell (i, i). This initialises the diagonal of the table.

Step 4. For spans of length 2 up to n, do the following:

a. For a span from i to j, consider all possible split points k between i and j.

b. For every rule A → B C, if B occurs in cell (i, k) and C occurs in cell (k+1, j), then A can derive the entire span from i to j. Add A to cell (i, j).

Step 5. After filling all spans, if the start symbol S appears in cell (1, n), then the sentence is in the language of the grammar, and parse trees can be recovered by remembering which rules were used at each step.

For PCFGs, CKY can be adapted to keep not just sets of nonterminals but probabilities of the best derivations for each nonterminal over each span, choosing higher probability combinations when multiple options are available.

Real world examples of parsing in NLP

Several practical scenarios show the impact of syntactic parsing.

Example one. Information extraction in news. An organisation wants to automatically build a database of “company acquires company” events from business news articles. By parsing sentences, the system can identify the main clause, subject and object of verbs like “acquired” or “bought” and thus extract structured relations with much higher precision than pattern matching alone.

Example two. Grammar checking and writing assistance. Modern writing tools often highlight subject–verb agreement errors or misplaced modifiers. These tools rely on parse trees or dependency structures to detect non-standard patterns in sentence structure.

Example three. Question answering. When a user asks “Which city hosts the annual tech conference?”, the system can parse the question to recognise “Which city” as the requested entity type and “hosts the annual tech conference” as the predicate, then search for cities that satisfy this relation in a knowledge base or text corpus.

Example four. Machine translation. When translating between languages with different word orders, parse trees help a system align source phrases to appropriate positions in the target sentence and ensure agreement between verbs and subjects.

In modern neural systems, explicit parse trees may be used less frequently, but syntactic knowledge still plays a role, either directly through syntactic features or indirectly through pretraining on parsing tasks.

Python code example using NLTK CFG and parser

Python libraries such as NLTK provide convenient tools for experimenting with CFGs and parsing. The following example builds a tiny grammar and parses a sentence into a tree.

import nltk

from nltk import CFG

from nltk.parse import ChartParser

# Define a small CFG

grammar = CFG.fromstring("""

S -> NP VP

NP -> Det N

VP -> V NP

Det -> 'the'

N -> 'dog' | 'cat'

V -> 'chased'

""")

# Create a chart parser

parser = ChartParser(grammar)

sentence = "the dog chased the cat".split()

for tree in parser.parse(sentence):

print(tree)

tree.pretty_print()

break # show only first treeThis code defines a CFG in a declarative format, constructs a chart parser and then parses a simple sentence. Students can modify the grammar rules, add ambiguity, and see how the parser responds. For PCFG experiments, NLTK also supports probabilistic grammars and Viterbi style parsing.

Summary

Grammars and parsing provide a systematic way to model sentence structure in Natural Language Processing. Context Free Grammars describe how phrases and sentences can be built from smaller units, while parsing algorithms turn individual sentences into explicit parse trees. Because natural language is highly ambiguous, probabilistic or stochastic grammars are often used to prefer more natural structures over less likely ones.

Deterministic ideas from formal language theory show how carefully designed grammars can support efficient parsing, but real language requires richer, often probabilistic models. Chart parsers such as CKY and Earley demonstrate how dynamic programming can explore many possible structures while sharing work across them. These concepts form a bridge between symbolic approaches to syntax and modern statistical or neural methods.

Next. Lecture 7 – Representing Meaning and Semantic Roles in NLP.

People also ask:

A Context Free Grammar is a formal system of production rules that describes how sentences can be generated from nonterminal symbols and terminals. It is widely used in NLP to model basic phrase structure and to support syntactic parsing.

Deterministic grammars are designed so that a parser can choose one unique rule or action at each step, often used for programming languages. Stochastic grammars assign probabilities to rules so that multiple parses are possible, but some are preferred because they are more probable in real language use.

A Probabilistic Context Free Grammar is a CFG in which each production rule has an associated probability. The probability of a parse tree is the product of its rule probabilities. PCFGs allow a parser to choose the most likely parse when a sentence is structurally ambiguous.

The CKY algorithm is a dynamic programming method for parsing sentences with CFGs in Chomsky Normal Form. It fills a triangular chart with all nonterminals that can derive each substring of the sentence and can be extended to compute the most probable parse under a PCFG.

Even though many modern NLP models learn patterns directly from raw text, syntactic information remains useful. Parse trees provide interpretable structure, improve certain downstream tasks and are sometimes used to train or evaluate neural models. Understanding grammars and parsing also builds a strong theoretical foundation in NLP.