Sentiment analysis is one of the most popular and widely deployed applications of Natural Language Processing. Whenever a company wants to measure customer satisfaction from reviews, a political analyst wants to monitor public opinion on social media or a product team wants to track user reactions after a new feature launch, sentiment analysis is usually the first tool they consider.

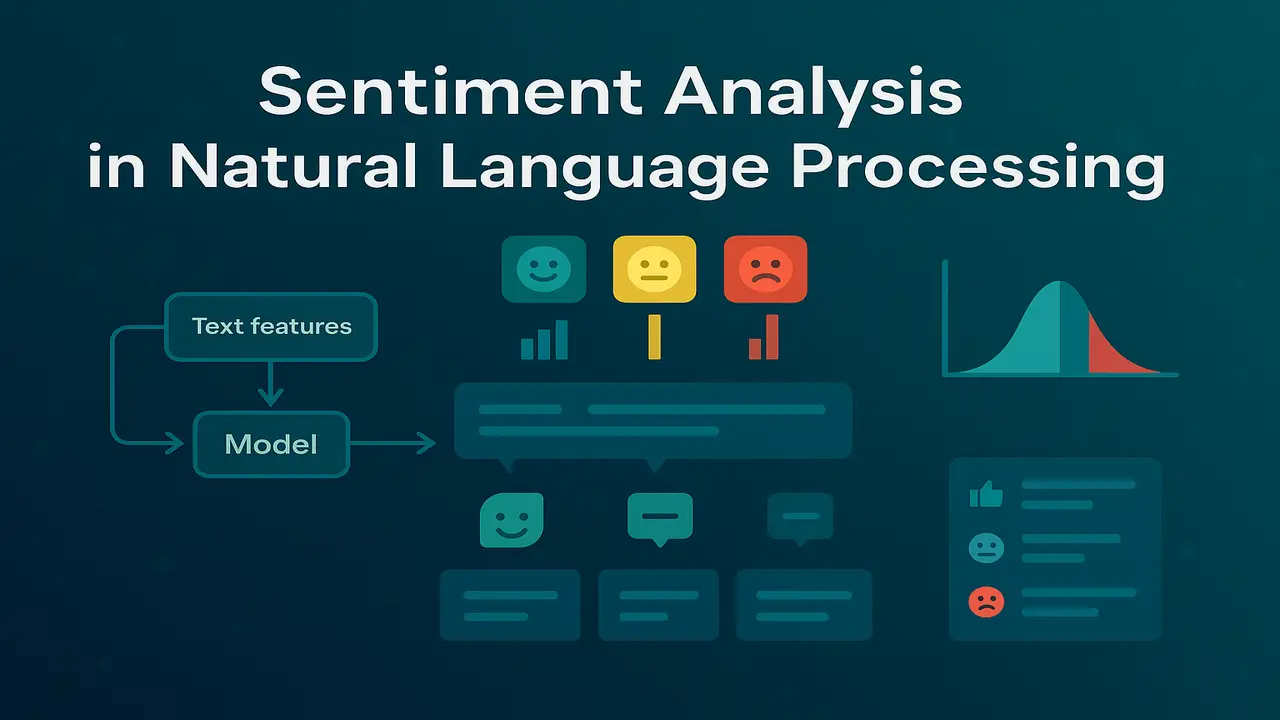

At its core, sentiment analysis is a text classification problem. The goal is to take a piece of text, such as a tweet, a product review or a support ticket, and automatically determine whether the expressed opinion is positive, negative, neutral or sometimes more fine grained. This lecture introduces the main ideas behind sentiment analysis, starting from basic lexicon based methods and moving towards supervised machine learning and deep learning approaches.

The aim is to connect high level intuition with practical algorithms so that you can both understand the theory and implement working systems for real data.

What is sentiment analysis

Sentiment analysis, sometimes called opinion mining, is the task of automatically detecting the opinion, emotion or attitude expressed in text. In its simplest form, this means classifying text into three classes: positive, negative or neutral.

However, many real systems use richer schemes.

- Five point scales, like five star ratings, that run from very negative through negative, neutral and positive to very positive.

- Aspect based sentiment where sentiment is detected separately for different aspects of a product. For example, “The camera is great but the battery is terrible” has positive sentiment for camera and negative for battery.

- Emotion classification where the goal is to recognise specific emotions such as joy, anger, sadness or fear rather than simple polarity.

Despite this variety, the core idea remains the same: map unstructured text into structured labels that describe how the writer feels.

Typical sentiment analysis pipeline

A standard sentiment analysis pipeline consists of several stages.

First, text collection. The system gathers data from relevant sources such as product reviews, app store comments, support tickets, tweets or survey responses.

Second, preprocessing. Basic text processing techniques are applied: lowercasing, tokenization, optional stopword removal and normalization of URLs, mentions and emojis. For social media data, handling hashtags and informal spelling can be important.

Third, feature extraction or representation. Traditional approaches represent text using bag of words, n grams or TF–IDF features. Modern approaches use word embeddings or contextualized representations produced by transformer models.

Fourth, model training. A classifier is trained using labelled examples where each text has a known sentiment label. Algorithms range from Naive Bayes and logistic regression to Support Vector Machines and neural networks.

Fifth, evaluation and deployment. The model is evaluated using held out data and metrics such as accuracy, precision, recall and F1 score. Once performance is acceptable, the system is deployed to analyze incoming text in real time or batch mode.

Understanding each stage helps when building or debugging a sentiment analysis system.
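As a rough illustration of the preprocessing stage, the sketch below lowercases a social media post, replaces URLs and mentions with placeholder tokens, strips the hashtag symbol and tokenizes the result. The placeholder names `<url>` and `<user>` and the regular expressions are assumptions chosen for this example, not a standard.

```python
import re

# Illustrative patterns (assumptions for this sketch, not a fixed standard).
URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
# Placeholders first, then words (with an optional apostrophe part), then any
# single non-space symbol such as punctuation or an emoji.
TOKEN_RE = re.compile(r"<url>|<user>|[a-z0-9]+(?:'[a-z]+)?|[^\w\s]")


def preprocess(text):
    """Lowercase, normalize URLs and mentions, then split into tokens."""
    text = text.lower()
    text = URL_RE.sub("<url>", text)
    text = MENTION_RE.sub("<user>", text)
    text = text.replace("#", "")  # keep the hashtag word, drop the symbol
    return TOKEN_RE.findall(text)


tokens = preprocess("Loving the new update from @AcmeApp! https://example.com #fast")
print(tokens)
# ['loving', 'the', 'new', 'update', 'from', '<user>', '!', '<url>', 'fast']
```

Stopword removal is left out on purpose here, since words such as "not" carry sentiment and are often worth keeping.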

Lexicon based sentiment analysis

Before machine learning based approaches became dominant, many systems used lexicon based methods. A sentiment lexicon is a dictionary of words annotated with polarity: positive or negative labels, or sometimes numeric strength values. For example, “excellent” might have a high positive score, while “terrible” has a strong negative score.

A simple lexicon based method works as follows:

- The text is tokenized.

- Each token is looked up in the lexicon.

- Positive scores are added, negative scores are subtracted.

- If the final sum is above a threshold, the text is classified as positive. If below, as negative. Values near zero can be labelled neutral.
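The steps above can be sketched in a few lines of Python. The lexicon here is a tiny hypothetical dictionary with made-up scores, and the threshold of 0.5 is an arbitrary assumption; a real system would load a published lexicon and tune the threshold on data.

```python
import re

# Hypothetical toy lexicon with invented scores, for illustration only.
LEXICON = {
    "excellent": 2.0, "great": 1.5, "good": 1.0, "love": 1.5,
    "bad": -1.0, "terrible": -2.0, "hate": -1.5,
}


def lexicon_sentiment(text, threshold=0.5):
    """Sum the lexicon scores of all tokens and map the total to a label."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = sum(LEXICON.get(tok, 0.0) for tok in tokens)
    if score > threshold:
        return "positive", score
    if score < -threshold:
        return "negative", score
    return "neutral", score


print(lexicon_sentiment("The camera is excellent"))       # ('positive', 2.0)
print(lexicon_sentiment("Terrible battery, I hate it"))   # ('negative', -3.5)
print(lexicon_sentiment("It arrived on Tuesday"))         # ('neutral', 0.0)
```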

Lexicon based methods have some advantages. They are easy to understand, require no labelled training data and can work reasonably well for short, opinion rich texts. Publicly available lexicons exist for many languages; well known English examples include SentiWordNet and social media specific lexicons such as VADER.

However, they also have serious limitations.

- They ignore context. “not good” is negative but contains the positive word “good”.

- They struggle with sarcasm and irony. “Great, my phone died again.” is negative despite the positive word “Great”.

- They cannot adapt easily to domain specific usage. For example, “sick” can be positive in some slang contexts.

For these reasons, modern sentiment analysis usually relies on supervised machine learning, often using lexicons as additional features rather than the sole signal.
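The context problem described above is easy to reproduce. The sketch below compares a plain lexicon sum with a variant that flips the score of a word directly preceded by a negator. Both the toy lexicon and the one-word negation window are assumptions made for illustration; the heuristic repairs “not good” but still misreads the sarcastic example.

```python
import re

# Hypothetical toy lexicon and negator list, for illustration only.
LEXICON = {"good": 1.0, "great": 1.5, "bad": -1.0, "terrible": -2.0}
NEGATORS = {"not", "no", "never"}


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


def naive_score(text):
    """Plain lexicon sum that ignores context."""
    return sum(LEXICON.get(tok, 0.0) for tok in tokenize(text))


def negation_aware_score(text):
    """Flip the polarity of a word that directly follows a negator."""
    tokens = tokenize(text)
    score = 0.0
    for i, tok in enumerate(tokens):
        value = LEXICON.get(tok, 0.0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    return score


print(naive_score("The battery is not good"))                # 1.0 (wrongly positive)
print(negation_aware_score("The battery is not good"))       # -1.0
print(negation_aware_score("Great, my phone died again."))   # 1.5 (sarcasm still missed)
```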

Supervised sentiment classification

In supervised sentiment analysis, we assume access to a labelled dataset where each text is annotated with a sentiment label. Examples include movie review datasets, product review corpora or manually labelled social media posts.

The key steps are:

- Represent each text as a feature vector. For traditional models this might be a bag of words or n gram TF–IDF vector. For deep learning models it can be a sequence of word embeddings or transformer token embeddings.

- Choose a classification algorithm. Naive Bayes, logistic regression, linear SVMs, convolutional neural networks, recurrent networks and transformer based models like BERT are all possible choices.

- Train the model on the labelled data by minimizing a loss function that measures the difference between predicted and true labels.

- Evaluate the trained model on a validation or test set using metrics such as accuracy and F1 score.
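To make the training step more concrete, the following sketch computes one common loss function, the mean binary cross-entropy (log loss) of a logistic regression model, for two candidate weight settings. The two-feature representation (counts of "great" and "terrible") and the weight values are assumptions invented for the example; training amounts to searching for weights that make this number small.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(weights, bias, examples):
    """Mean binary cross-entropy over (features, label) pairs.

    label is 1 for positive and 0 for negative.
    """
    total = 0.0
    for features, label in examples:
        z = bias + sum(w * x for w, x in zip(weights, features))
        p = sigmoid(z)  # predicted probability of the positive class
        total += -(label * math.log(p) + (1 - label) * math.log(1 - p))
    return total / len(examples)


# Toy features: [count of "great", count of "terrible"] (illustrative only).
examples = [([1, 0], 1), ([0, 1], 0), ([2, 0], 1), ([0, 2], 0)]

untrained = log_loss([0.0, 0.0], 0.0, examples)   # every prediction is 0.5
better = log_loss([2.0, -2.0], 0.0, examples)     # weights that match the labels

print(untrained, better)  # the second value is smaller
```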

For many practical tasks, simple linear models such as logistic regression or SVMs with n gram features already give strong performance, especially when the training data is large and the domain is stable.

Deep learning models become particularly useful when dealing with complex language phenomena, long documents or multiple languages, or when you want a single pretrained model that can be fine tuned on relatively small domain specific datasets.


Real world examples of sentiment analysis

Sentiment analysis is widely used in industry and research. A few illustrative scenarios follow.

First, brand monitoring. A company wants to know how customers feel about its latest product launch. By collecting tweets that mention the product name and running them through a sentiment classifier, the company can visualize the ratio of positive to negative mentions over time and quickly react to emerging problems.

Second, customer feedback triage. An online service receives thousands of support tickets every day. Sentiment analysis can automatically flag highly negative messages so that support staff can prioritize urgent cases, while neutral or positive messages can follow standard queues.

Third, product improvement. By analyzing reviews on e-commerce platforms, a team can detect which aspects of a product receive consistent praise or complaints. Aspect based sentiment analysis can highlight patterns such as “battery life often negative, camera often positive” for a smartphone.

Fourth, financial analysis. Some trading systems use sentiment analysis on news articles and social media to estimate market mood toward companies or assets, although this must be handled carefully to avoid overfitting and noise.

These examples show how converting subjective opinions into numeric scores and structured labels enables large scale analysis that would be impossible manually.
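As a small illustration of the brand monitoring scenario, the sketch below aggregates classifier output into a daily share of positive mentions. The dates and labels are invented sample data, and the choice to exclude neutral mentions from the ratio is an assumption of this example.

```python
from collections import Counter, defaultdict

# Hypothetical classifier output: (date, predicted label) pairs.
predictions = [
    ("2024-05-01", "positive"), ("2024-05-01", "positive"), ("2024-05-01", "negative"),
    ("2024-05-02", "negative"), ("2024-05-02", "negative"), ("2024-05-02", "positive"),
    ("2024-05-02", "neutral"),
]


def daily_positive_share(predictions):
    """Return {date: positive / (positive + negative)} in date order."""
    counts = defaultdict(Counter)
    for day, label in predictions:
        counts[day][label] += 1
    shares = {}
    for day, c in sorted(counts.items()):
        polar = c["positive"] + c["negative"]
        shares[day] = c["positive"] / polar if polar else None
    return shares


shares = daily_positive_share(predictions)
for day, share in shares.items():
    print(day, round(share, 2))
```

A sudden drop in this share from one day to the next is the kind of signal a team might investigate.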

For quick rule-based sentiment analysis tailored to social media text, explore the VADER sentiment analysis toolkit.

A simple sentiment analysis algorithm step by step

A basic supervised sentiment classifier using bag of words and logistic regression can be described in simple steps.

Step 1. Collect a labelled dataset of texts with sentiment labels, for example positive and negative movie reviews.

Step 2. Preprocess each text: lowercase, tokenize and optionally remove very common stopwords that carry little sentiment information.

Step 3. Build a vocabulary of all words that occur frequently enough across the corpus. Assign each word an index.

Step 4. Represent each text as a feature vector. For bag of words, the i-th component of the vector is the count of the i-th vocabulary word in that text. TF–IDF weighting can be used instead of raw counts to downweight very frequent words.

Step 5. Split the data into training and test sets. For example, use eighty percent for training and twenty percent for testing.

Step 6. Train a logistic regression classifier using the training vectors and their labels. The optimization algorithm will learn a weight for each feature that indicates how strongly that word contributes to positive or negative sentiment.

Step 7. For evaluation, transform the test texts into feature vectors using the same vocabulary and compute predictions using the trained model.

Step 8. Calculate accuracy, precision, recall and F1 score on the test set to assess performance.

Step 9. Optionally, inspect the top positive and negative feature weights to see which words the model considers most indicative of each sentiment class.

This algorithm is simple but forms the foundation of many practical sentiment systems, especially when combined with good preprocessing and sufficient data.
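Steps 3 and 4 can be written out by hand to show what a bag of words vector actually contains. This is a minimal sketch using whitespace tokenization and raw counts; the function names and the `min_count` parameter are hypothetical helpers for this example, and libraries such as scikit-learn provide more complete implementations.

```python
from collections import Counter


def tokenize(text):
    return text.lower().split()


def build_vocabulary(texts, min_count=1):
    """Map each sufficiently frequent word to a column index."""
    counts = Counter(tok for text in texts for tok in tokenize(text))
    kept = sorted(tok for tok, n in counts.items() if n >= min_count)
    return {tok: i for i, tok in enumerate(kept)}


def vectorize(text, vocab):
    """Count how often each vocabulary word occurs; unknown words are ignored."""
    vec = [0] * len(vocab)
    for tok in tokenize(text):
        idx = vocab.get(tok)
        if idx is not None:
            vec[idx] += 1
    return vec


train_texts = ["great camera great screen", "terrible battery", "great battery"]
vocab = build_vocabulary(train_texts)

print(vocab)
# {'battery': 0, 'camera': 1, 'great': 2, 'screen': 3, 'terrible': 4}
print(vectorize("great great phone", vocab))
# [0, 0, 2, 0, 0]
```

Note that "phone" is dropped from the second vector because it never appeared in the training texts, which is why test texts must be transformed with the vocabulary built from the training data.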

For a classic sentiment lexicon used in many lexicon-based systems, see the SentiWordNet project.

Python code example with scikit-learn

The following example demonstrates a small sentiment analysis pipeline using scikit-learn and a linear model. It assumes you have a dataset of texts and labels stored in two lists.

```python
from sklearn.model_selection import train_test_split
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report, accuracy_score

# Example data (replace with real dataset)
texts = [
    "I absolutely love this phone, the camera is amazing!",
    "Terrible battery life, I hate this device.",
    "It is okay, nothing special but not bad either.",
    "Best purchase I have made this year.",
    "The screen broke after one week, very disappointed."
]
labels = ["positive", "negative", "neutral", "positive", "negative"]

# Split into train and test
X_train, X_test, y_train, y_test = train_test_split(
    texts, labels, test_size=0.4, random_state=42
)

# Convert text to TF-IDF features
vectorizer = TfidfVectorizer(
    ngram_range=(1, 2),  # unigrams and bigrams
    max_features=5000,
    min_df=1
)
X_train_vec = vectorizer.fit_transform(X_train)
X_test_vec = vectorizer.transform(X_test)

# Train logistic regression classifier
clf = LogisticRegression(max_iter=1000)
clf.fit(X_train_vec, y_train)

# Evaluate
y_pred = clf.predict(X_test_vec)
print("Accuracy:", accuracy_score(y_test, y_pred))
print(classification_report(y_test, y_pred))

# Predict sentiment of new sentences
new_texts = [
    "The update made the app much faster.",
    "Customer service was rude and unhelpful."
]
new_vec = vectorizer.transform(new_texts)
print(clf.predict(new_vec))
```

In a classroom or lab, students can replace the toy dataset with a larger corpus, adjust vectorizer settings, compare logistic regression with other classifiers and explore error cases.
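The per class figures that `classification_report` prints in the example above can also be computed by hand, which helps when interpreting them. The sketch below uses plain Python and a small invented set of true and predicted labels; the helper name `per_class_metrics` is hypothetical.

```python
def per_class_metrics(y_true, y_pred, target):
    """Precision, recall and F1 for one class, treating it as the 'positive' case."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == target and p == target)  # correctly predicted
    fp = sum(1 for t, p in pairs if t != target and p == target)  # predicted but wrong
    fn = sum(1 for t, p in pairs if t == target and p != target)  # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Invented labels for illustration.
y_true = ["positive", "negative", "positive", "negative", "positive"]
y_pred = ["positive", "positive", "positive", "negative", "negative"]

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
precision, recall, f1 = per_class_metrics(y_true, y_pred, "positive")

print("Accuracy:", accuracy)  # 0.6
print("Positive class:", round(precision, 3), round(recall, 3), round(f1, 3))
# Positive class: 0.667 0.667 0.667
```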

Summary

Sentiment analysis demonstrates how Natural Language Processing can turn subjective human opinions into structured data that organizations can analyze at scale. Starting from basic lexicon based methods and extending to supervised machine learning and deep neural models, sentiment systems classify text into polarity classes and sometimes more detailed emotion categories.

A successful sentiment analysis pipeline depends on thoughtful preprocessing, appropriate feature representations and well chosen models. It must also be evaluated carefully because language is subtle, context dependent and often noisy. While transformer based models currently provide the strongest performance, even simple models built on TF–IDF features can deliver practical value for many applications.

Next: Lecture 9 – Temporal Representations and Corpus-Based Methods in NLP.

People also ask:

Sentiment analysis is the automatic detection of opinion or emotion in text. It typically classifies a review, post or comment as positive, negative or neutral, and is widely used for analyzing customer feedback, social media data and survey responses.

A basic model converts text into numeric features using methods such as bag of words or TF–IDF and then trains a classifier like logistic regression or Naive Bayes on labelled examples. The trained model learns which words and phrases are associated with each sentiment class and can then predict sentiment for new texts.

Challenges include handling negation, sarcasm and irony, dealing with domain specific language and slang, recognizing mixed or aspect based sentiment within the same text and coping with noisy social media data. Models must also generalize well across time as language usage changes.

Lexicon based methods rely on dictionaries of positive and negative words and compute a score based on their presence in text. Machine learning based methods learn sentiment patterns from labelled data and often achieve higher accuracy, especially when context and complex expressions are important.

Transformer based models such as BERT or domain specific variants are well suited to sentiment analysis. They can capture rich contextual information and subtle patterns in language, and fine tuning them on sentiment datasets often yields state of the art performance and handles nuances that simpler models miss.