Natural language is deeply tied to time and to real usage in communities. When people describe events, they specify when something happened, how long it lasted and how different events relate in time. At the same time, they learn and use language from exposure to many examples in context. Modern Natural Language Processing therefore needs two complementary capabilities.

First, the ability to represent and extract temporal information from text, so that systems can understand when events occur, how they are ordered and how tense and aspect contribute to meaning. Second, the ability to learn from large corpora, using statistical patterns, collocations and distributional information to model how language is actually used.

This lecture introduces both sides: temporal representations and corpus based methods. You will see how temporal expressions and event relations are represented, and how corpora can be used to discover frequencies, collocations and distributional patterns that drive many NLP models.

Temporal information in language

Everyday language is full of temporal expressions.

Examples.

The meeting is tomorrow at three pm.

He worked there from two thousand fifteen to two thousand nineteen.

By the time we arrived, the show had already started.

These sentences contain explicit time expressions such as tomorrow and two thousand fifteen, as well as temporal relations between events. Temporal information comes from several sources.

- Temporal expressions such as dates, times, durations and deictic terms like today, yesterday and last week.

- Verb tense and aspect, which indicate whether an event is past, present, ongoing, completed or habitual.

- Discourse connectives, such as before, after, during and while, which relate different events in time.

To build systems that can answer questions like “What happened before the surgery?” or construct timelines from news articles, we need structured temporal representations that make these relationships explicit.

Representing temporal information

Temporal representations in NLP usually aim to capture three kinds of entities.

- Time expressions or timexes, such as dates, times and durations.

- Events, which are situations described by verbs or nominalisations.

- Temporal relations between events and times, such as before, after, includes or simultaneous.

Frameworks such as TimeML define annotation schemes for marking up temporal expressions, events and temporal links in text. A time expression like “June 15, 2023” is normalised to a canonical format, while an event like “launched” is linked to the relevant time and to other events via temporal relations.

Temporal representations may treat time as:

- Points on a timeline: useful for instantaneous events like an announcement.

- Intervals: useful for extended situations like working on a project or staying in a hospital.

By representing events and times explicitly, systems can reason about order, overlap and duration, and can support applications such as timeline construction, clinical record analysis and historical document interpretation.
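As a minimal sketch of the interval view, the following Python snippet classifies the relation between two intervals. The `Interval` class, the `relation` function and the label `is_included` are illustrative names, not part of TimeML, and the label set is a simplified subset of the relations discussed above. A point is modelled as an interval whose start and end coincide.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Interval:
    """A closed time interval; a point is an interval with start == end."""
    start: date
    end: date


def relation(a: Interval, b: Interval) -> str:
    """Return a coarse temporal relation of interval a with respect to b."""
    if a.end < b.start:
        return "before"
    if b.end < a.start:
        return "after"
    if a.start == b.start and a.end == b.end:
        return "simultaneous"
    if a.start <= b.start and b.end <= a.end:
        return "includes"
    if b.start <= a.start and a.end <= b.end:
        return "is_included"
    return "overlaps"


# Hypothetical dates chosen only for illustration.
job = Interval(date(2015, 1, 1), date(2019, 12, 31))
hospital_stay = Interval(date(2017, 3, 1), date(2017, 3, 10))
announcement = Interval(date(2020, 6, 15), date(2020, 6, 15))

print(relation(job, hospital_stay))   # includes
print(relation(job, announcement))    # before
```

Storing explicit start and end dates is one simple design choice; it makes order and overlap checks plain comparisons, at the cost of requiring every event to be anchored to concrete dates.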

Temporal information extraction

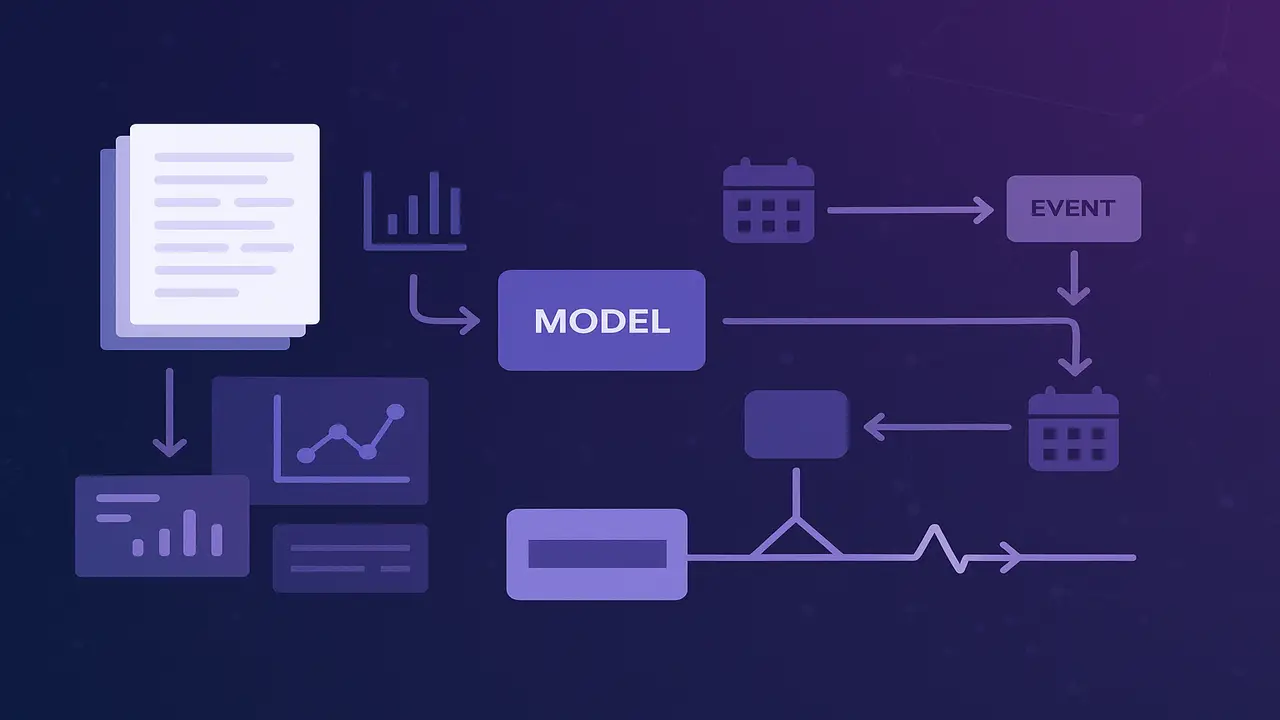

Temporal information extraction is the process of automatically identifying temporal expressions, events and temporal relations from text. A typical pipeline consists of several stages.

- Temporal expression recognition and normalisation: detect strings like “next Friday” or “three weeks ago” and convert them into normalised representations anchored to a reference time. Systems such as HeidelTime and SUTime use rule based patterns and lexicons to achieve high quality recognition and normalisation.

- Event detection: identify words or phrases that describe events, often focusing on verb predicates or nominalised events.

- Relation extraction: determine temporal relations between events, and between events and time expressions, such as before, after, overlaps and includes.
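To make the normalisation stage more concrete, here is a toy rule based normaliser for the two example strings above. It is only a sketch under stated assumptions: the function name `normalise`, the small lexicons and the two regular expressions are hypothetical, and they do not reflect how HeidelTime or SUTime are implemented. In particular, “next Friday” is read as the first Friday strictly after the reference date, which is only one of several possible readings.

```python
import re
from datetime import date, timedelta
from typing import Optional

NUMBER_WORDS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5}
WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday",
            "friday", "saturday", "sunday"]
UNIT_DAYS = {"day": 1, "week": 7}


def normalise(expr: str, reference: date) -> Optional[date]:
    """Map a relative time expression to a calendar date, or None if unknown."""
    text = expr.lower().strip()

    # Pattern 1: "<number> day(s)/week(s) ago"
    m = re.fullmatch(r"(\w+) (day|week)s? ago", text)
    if m:
        word = m.group(1)
        amount = NUMBER_WORDS.get(word)
        if amount is None and word.isdigit():
            amount = int(word)
        if amount is None:
            return None
        return reference - timedelta(days=amount * UNIT_DAYS[m.group(2)])

    # Pattern 2: "next <weekday>", read as the first such day
    # strictly after the reference date (an assumption of this sketch).
    m = re.fullmatch(r"next (\w+)", text)
    if m and m.group(1) in WEEKDAYS:
        target = WEEKDAYS.index(m.group(1))
        days_ahead = (target - reference.weekday() - 1) % 7 + 1
        return reference + timedelta(days=days_ahead)

    return None


ref = date(2024, 3, 5)  # a Tuesday, used as the reference time
print(normalise("three weeks ago", ref))  # 2024-02-13
print(normalise("next Friday", ref))      # 2024-03-08
```

Expressions outside the two patterns return `None`, which keeps the failure mode explicit; a real tagger covers far more patterns and also handles ambiguity.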

For example, consider the sentence:

“On March fifth, the company announced the new product and started shipping it two weeks later.”

Assuming the document was written in two thousand twenty four, a temporal information extraction system might produce:

- Time one: March fifth, two thousand twenty four.

- Time two: March nineteenth, two thousand twenty four, derived as two weeks after March fifth.

- Event one: announced, anchored to Time one.

- Event two: started shipping, anchored to Time two.

- Relation: Event one before Event two.

Such structured output enables timeline charts, temporal queries and integration with reasoning systems.
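The structured output for this example could be held in a few small record types, as in the sketch below. The class names `Timex`, `Event` and `TLink` loosely echo TimeML's TIMEX3, EVENT and TLINK tags, but the classes and their fields are illustrative assumptions, not the TimeML schema. The year 2024 is taken from the example output above.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Timex:
    tid: str
    text: str
    value: date      # normalised calendar date


@dataclass
class Event:
    eid: str
    text: str
    anchor: Timex    # time expression the event is anchored to


@dataclass
class TLink:
    source: str
    relation: str
    target: str


# Year 2024 is assumed, as in the example output above.
t1 = Timex("t1", "March fifth", date(2024, 3, 5))
t2 = Timex("t2", "two weeks later", t1.value + timedelta(weeks=2))

e1 = Event("e1", "announced", t1)
e2 = Event("e2", "started shipping", t2)

# Derive the event-event relation from the anchored dates.
if e1.anchor.value < e2.anchor.value:
    rel = "before"
elif e1.anchor.value > e2.anchor.value:
    rel = "after"
else:
    rel = "simultaneous"
link = TLink(e1.eid, rel, e2.eid)

# Print a simple timeline ordered by date, then the relation.
for ev in sorted([e2, e1], key=lambda e: e.anchor.value):
    print(ev.anchor.value.isoformat(), ev.text)
print(link.source, link.relation, link.target)
```

Once events carry normalised anchors, sorting them by date yields a timeline directly, and relations such as before can be derived rather than annotated by hand.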

Corpus based methods in NLP

While temporal representations capture structure in individual documents, corpus based methods look at patterns across very large collections of text. A corpus is a large organised collection of texts, often with metadata and linguistic annotation. Corpus linguistics and corpus based NLP study language empirically by examining frequencies, collocations and patterns in such data.

Key ideas in corpus based methods include:

- Frequency: counting how often words, phrases or constructions occur. This helps identify core vocabulary and domain specific terms.

- Concordance: displaying multiple occurrences of a word or phrase in context, typically in key word in context format, to help analyse usage.

- Collocation: words that occur together more often than expected by chance. For example, strong tea and make a decision are typical collocations in English.

- Distributional semantics: representing words by distributions of their contexts and using vector space models to capture semantic similarity.
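The concordance idea above can be sketched in a few lines of Python. The function name `kwic`, the whitespace tokenisation and the two sample sentences are assumptions made for illustration; dedicated concordancers offer sorting, filtering and much larger contexts.

```python
def kwic(texts, keyword, width=3):
    """Return key word in context lines with `width` tokens on each side."""
    lines = []
    for text in texts:
        tokens = text.lower().split()
        for i, tok in enumerate(tokens):
            if tok == keyword:
                left = " ".join(tokens[max(0, i - width):i])
                right = " ".join(tokens[i + 1:i + 1 + width])
                # Right-align the left context so keywords line up in a column.
                lines.append(f"{left:>30} [{tok}] {right}")
    return lines


# Hypothetical sample sentences.
sample = [
    "She made a strong cup of tea before the meeting",
    "There is strong evidence that the policy worked",
]
for line in kwic(sample, "strong"):
    print(line)
```

Aligning the keyword in a single column is what makes recurring left and right neighbours easy to spot by eye.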

Corpus based methods underlie many modern NLP models. For example, language models, embeddings and statistical parsers all rely on counts and patterns extracted from large corpora.
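As a rough illustration of how counts turn into a distributional representation, the sketch below builds sparse context count vectors and compares them with cosine similarity. The function names, the window size of two and the three toy sentences are assumptions for this example; practical systems use far larger corpora and usually reweight or compress the raw counts.

```python
from collections import Counter, defaultdict
import math


def context_vectors(texts, window=2):
    """Map each word to a Counter of the words seen within `window` tokens."""
    vectors = defaultdict(Counter)
    for text in texts:
        tokens = text.lower().split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


# Hypothetical toy corpus.
texts = [
    "we drank hot tea in the morning",
    "we drank hot coffee in the morning",
    "the committee made a decision in the morning",
]
vecs = context_vectors(texts)
print(cosine(vecs["tea"], vecs["coffee"]))    # identical contexts in this toy corpus
print(cosine(vecs["tea"], vecs["decision"]))  # only partly shared contexts
```

Words that appear in the same contexts end up with similar vectors, which is the core intuition behind embeddings.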

For a gentle introduction to corpus linguistics and corpus based language studies, see this unit from Lancaster University.

Collocation and corpus based statistics

Collocations are a central concept in corpus based analysis. They are word pairs or phrases that co occur significantly more often than would be expected if words were combined randomly. Examples include heavy rain, commit a crime and high probability.

To detect collocations automatically, corpus linguists use statistical measures such as:

- Pointwise mutual information, which compares the probability of two words occurring together to the product of their individual probabilities.

- The t score or z score, which assess whether co occurrence frequency deviates significantly from expected frequency under independence.

These measures highlight pairs that form strong associations. Collocations are important for lexicography, language teaching, machine translation and natural text generation, because they reflect conventional ways of expressing ideas.
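The measures above can be written down compactly. The sketch below uses formulations that are common in corpus linguistics: with observed co occurrence count O and expected count E = f(w1) · f(w2) / N, PMI is log2(O / E), the t score is (O − E) / √O and the z score is (O − E) / √E. The function name and the counts are hypothetical, and this simplified version ignores the window size when estimating E.

```python
import math


def association_scores(observed, freq_w1, freq_w2, n_tokens):
    """Return (PMI, t score, z score) for a word pair.

    observed         : number of times the two words co-occur (must be > 0)
    freq_w1, freq_w2 : corpus frequencies of each word
    n_tokens         : total number of tokens in the corpus
    """
    # Co-occurrences expected if the two words were independent.
    expected = freq_w1 * freq_w2 / n_tokens
    pmi = math.log2(observed / expected)
    t_score = (observed - expected) / math.sqrt(observed)
    z_score = (observed - expected) / math.sqrt(expected)
    return pmi, t_score, z_score


# Hypothetical counts, chosen only for illustration.
pmi, t, z = association_scores(observed=8, freq_w1=20, freq_w2=40, n_tokens=1000)
print(f"PMI={pmi:.2f}  t={t:.2f}  z={z:.2f}")  # PMI=3.32  t=2.55  z=8.05
```

PMI tends to favour rare pairs, while the t score favours frequent ones, so it is often worth inspecting rankings from more than one measure.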

Temporal corpora and diachronic analysis

Corpus based methods can also be applied to temporal aspects of language use. Diachronic corpora, which contain texts from different time periods, allow researchers to track changes in word usage, collocations and grammar over time.

For example, one can examine how the collocates of a verb like grasp change across decades, or how new expressions such as lockdown or pandemic era appear and spread in news corpora. Temporal corpora thus support both linguistic research and practical tasks such as detecting emerging terms and shifts in sentiment over time.
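A simple way to track an emerging term is to compute its relative frequency per time slice. The sketch below assumes a corpus given as (year, text) pairs; the data, the function name and the per 1,000 tokens normalisation are illustrative choices, not taken from any particular diachronic corpus.

```python
from collections import Counter

# Hypothetical (year, text) pairs standing in for a dated news corpus.
dated_texts = [
    (2018, "the city council approved the new budget"),
    (2019, "the council debated the transport budget"),
    (2020, "the lockdown closed schools and the lockdown hit shops"),
    (2021, "shops reopened after the lockdown ended"),
]


def relative_frequency_by_year(docs, term):
    """Return {year: occurrences of term per 1,000 tokens}, ordered by year."""
    term_counts = Counter()
    token_counts = Counter()
    for year, text in docs:
        tokens = text.lower().split()
        token_counts[year] += len(tokens)
        term_counts[year] += tokens.count(term)
    return {year: 1000 * term_counts[year] / token_counts[year]
            for year in sorted(token_counts)}


for year, freq in relative_frequency_by_year(dated_texts, "lockdown").items():
    print(year, round(freq, 1))
```

Normalising by the number of tokens per year matters because raw counts would otherwise mostly reflect how much text each period contributes.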


Step by step algorithm: simple collocation extraction

A straightforward corpus based algorithm for finding collocations near a target word can be described as follows.

Step 1. Choose a target word W for which you want to study collocations, for example risk.

Step 2. Collect a large corpus of texts in the relevant domain and language.

Step 3. Tokenize each text and optionally normalize case and punctuation.

Step 4. For each occurrence of W, record the words that appear within a fixed window around it, for example two words to the left and two words to the right.

Step 5. Count the frequency of each candidate collocate C that appears near W.

Step 6. Also compute the overall frequency of W, the overall frequency of C and the total number of tokens in the corpus.

Step 7. Compute a collocation statistic such as pointwise mutual information between W and C based on their joint and marginal probabilities. High PMI indicates a strong association.

Step 8. Rank collocates by the chosen statistic and inspect the top results. These will often correspond to meaningful collocations such as take a risk, reduce risk and risk factor.

This algorithm can be extended to multiword collocations, different window sizes and more advanced association measures, but the basic idea remains the same.

Python code example: corpus counts and simple collocations

The following simple example illustrates how to compute word frequencies and bigram collocations from a small corpus using Python. In practice, you would replace the sample texts with a much larger collection.

```python
from collections import Counter
import math

corpus = [
    "The company reported strong growth last year",
    "The company expects strong growth next year",
    "There is a strong risk of delay this year",
    "Investors see strong potential but also some risk",
]


def tokenize(text):
    """Tokenize very simply (for teaching purposes): lowercase, split on whitespace."""
    return text.lower().split()


# Unigram and bigram counts. Bigrams are counted within each document,
# so that no pair spans a document boundary.
unigram_counts = Counter()
bigram_counts = Counter()
for doc in corpus:
    doc_tokens = tokenize(doc)
    unigram_counts.update(doc_tokens)
    bigram_counts.update(zip(doc_tokens, doc_tokens[1:]))

N = sum(unigram_counts.values())          # total number of tokens
N_bigrams = sum(bigram_counts.values())   # total number of bigrams


def pmi(w1, w2):
    """Pointwise mutual information (log base 2) of the bigram (w1, w2)."""
    joint = bigram_counts[(w1, w2)]
    if joint == 0:
        return 0.0  # unseen pair: PMI is undefined, 0.0 is used as a placeholder
    p_w1 = unigram_counts[w1] / N
    p_w2 = unigram_counts[w2] / N
    p_w1w2 = joint / N_bigrams
    return math.log2(p_w1w2 / (p_w1 * p_w2))


target = "strong"
candidates = []
for (w1, w2), count in bigram_counts.items():
    if w1 == target:
        candidates.append((w2, count, round(pmi(w1, w2), 3)))

# Sort by PMI score, highest first
candidates.sort(key=lambda x: x[2], reverse=True)

for word, count, score in candidates:
    print(f"{target} {word} -> count={count}, PMI={score}")
```

Students can extend this code to:

- Use a real corpus, such as news articles, and observe collocations like strong evidence, strong demand and strong support.

- Change the target word and compare collocational patterns.

- Replace bigrams with window based co occurrences to capture less strictly adjacent collocations.
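The last extension above, window based co occurrence, might look like the following sketch. The function name `window_collocates`, the toy sentences and the probability estimate (co occurrences divided by total tokens, without correcting for window size) are simplifying assumptions; other normalisations are possible and will change the absolute PMI values.

```python
from collections import Counter
import math


def window_collocates(texts, target, window=2):
    """Score words within `window` tokens of `target` by PMI, best first."""
    unigrams = Counter()
    near_target = Counter()
    for text in texts:
        tokens = text.lower().split()
        unigrams.update(tokens)
        for i, tok in enumerate(tokens):
            if tok != target:
                continue
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            near_target.update(tokens[j] for j in range(lo, hi) if j != i)

    n = sum(unigrams.values())
    p_target = unigrams[target] / n
    scored = []
    for word, joint in near_target.items():
        # Simple estimate: joint probability as co-occurrences per token.
        p_joint = joint / n
        p_word = unigrams[word] / n
        score = math.log2(p_joint / (p_target * p_word))
        scored.append((word, joint, round(score, 3)))
    return sorted(scored, key=lambda x: x[2], reverse=True)


# Hypothetical toy corpus.
texts = [
    "the report identified a major risk factor",
    "managers tried to reduce the risk of delay",
    "they decided to take a risk on the new design",
]
for word, count, score in window_collocates(texts, "risk"):
    print(word, count, score)
```

With a window of two, collocates such as reduce and take are captured even though another word separates them from risk, which a strict bigram count would miss.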

A similar procedure can be applied to temporal expressions, for example tracking how often certain years or date expressions co occur with specific events or domains.

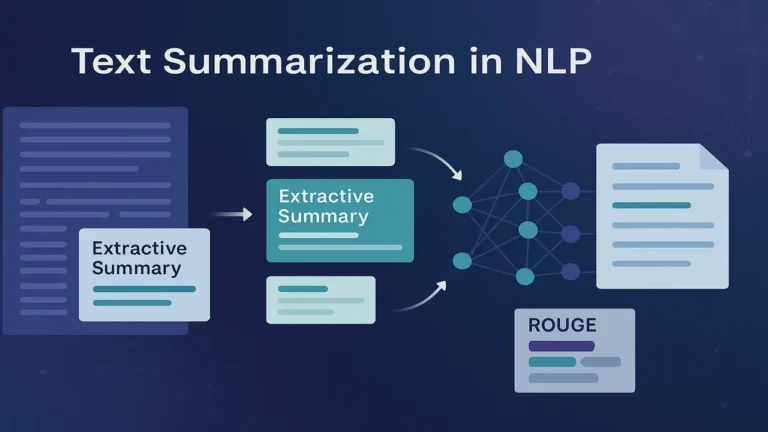

Summary

Temporal representations and corpus based methods give Natural Language Processing systems two essential capabilities: understanding when events happen and understanding how language is used in real data. Temporal information extraction builds structured timelines from raw text, allowing systems to handle questions about order, duration and temporal relations. Corpus based methods, meanwhile, reveal patterns of frequency, collocation and distributional similarity that underpin language models and many statistical techniques.

Together, these approaches move NLP beyond isolated sentences toward richer models that respect both time and usage. Temporal corpora allow researchers and practitioners to study how language and events evolve, while temporal representations within documents support applications ranging from clinical record analysis to news timeline construction.

Next: Lecture 10 – Information Retrieval and Vector Space Model in NLP.

People also ask:

Temporal representations are structured descriptions of time related information in text, including time expressions, events and the temporal relations between them. They allow systems to build timelines, answer questions about when events occurred and reason about temporal order.

Temporal information extraction is the task of automatically identifying temporal expressions, events and temporal relations in text. It typically involves temporal tagging and normalization, event detection and relation classification.

Corpus based methods analyze large collections of texts to discover patterns such as word frequencies, collocations and distributional similarities. These methods form the empirical foundation of many statistical and neural NLP models.

A collocation is a pair or group of words that frequently occur together, such as strong tea or heavy rain. Collocations are important for lexicography, language teaching and natural language generation because they reflect typical and natural usage.

Temporal representations provide structured information about time within individual documents, while corpus based methods reveal large scale usage patterns across many documents. Together they support applications such as timeline construction, event analysis over time and distributional modelling of temporal language.